Understanding the intricate nature of light often begins with mastering the measurement of wavelength. Spectroscopy, a powerful technique used by researchers at institutions such as the National Institute of Standards and Technology (NIST), offers precise methods for characterizing electromagnetic radiation. Accurate wavelength measurement is crucial for applications ranging from materials science to telecommunications. Instruments such as the Michelson interferometer have transformed the field, allowing scientists to probe light’s wave properties with unprecedented accuracy, and the legacy of pioneering physicists like Albert Michelson continues to inspire advances in wavelength measurement and its related technologies. For anyone working with light and electromagnetic radiation, knowing how to measure wavelength accurately is essential.

Wavelength, a fundamental property of wave phenomena, serves as a critical lens through which we perceive and understand the universe. From the vibrant colors of a rainbow to the invisible signals that power our communication networks, wavelength measurement is the bedrock of countless scientific and technological advancements.

Its accurate determination unlocks insights into the nature of light, sound, and matter, driving innovation across diverse fields.

The Indispensable Role of Precise Measurement

In the realm of science and technology, precise wavelength measurement isn’t merely a desirable feature; it’s an absolute necessity. Minute variations in wavelength can signify critical differences in material composition, signal integrity, or even the behavior of celestial objects.

Consider the implications in medical diagnostics, where subtle spectral shifts can indicate the presence of disease, or in telecommunications, where precise wavelength control ensures the seamless transmission of data. The accuracy of these measurements directly impacts the reliability and efficacy of the technologies we rely on daily.

Without it, scientific inquiry would be hampered, and technological progress would grind to a halt.

Wavelength, Frequency, and the Speed of Light: An Intertwined Trio

The concept of wavelength is inextricably linked to two other fundamental properties of waves: frequency and the speed of light. These three parameters are related by a simple yet profound equation:

c = λν

Where:

- ‘c’ represents the speed of light in a vacuum, exactly 299,792,458 meters per second (a value fixed by the SI definition of the meter).

- ‘λ’ denotes the wavelength.

- ‘ν’ symbolizes the frequency.

This equation reveals an inverse relationship between wavelength and frequency: as wavelength increases, frequency decreases, and vice versa, while the speed of light remains constant. Understanding this relationship is crucial for manipulating and utilizing wave phenomena effectively.

For instance, in radio communications, different frequencies (and thus wavelengths) are assigned to different channels, ensuring that signals don’t interfere with one another.
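Because c is fixed, converting between frequency and vacuum wavelength is a single division. The Python sketch below shows this in both directions; the function names are illustrative, and 100 MHz is simply an example of a typical FM broadcast frequency.

```python
# Speed of light in vacuum in m/s (exact, by definition of the SI metre).
C = 299_792_458.0


def wavelength_from_frequency(frequency_hz: float) -> float:
    """Return the vacuum wavelength in metres for a frequency in hertz (λ = c / ν)."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return C / frequency_hz


def frequency_from_wavelength(wavelength_m: float) -> float:
    """Return the frequency in hertz for a vacuum wavelength in metres (ν = c / λ)."""
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    return C / wavelength_m


if __name__ == "__main__":
    # A 100 MHz channel has a wavelength of about 3 m;
    # doubling the frequency halves the wavelength.
    for f in (100e6, 200e6):
        print(f"{f / 1e6:.0f} MHz -> {wavelength_from_frequency(f):.3f} m")
```

The inverse relationship is visible directly in the loop: the 200 MHz channel has half the wavelength of the 100 MHz one.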

A Historical Perspective: Wavelength’s Impact on Technological Evolution

The historical impact of wavelength understanding on technological progress is nothing short of revolutionary. From the early experiments on light diffraction by Fraunhofer to the development of laser technology, advancements in wavelength measurement have consistently paved the way for groundbreaking innovations.

X-rays offer a telling example. Röntgen discovered them in 1895 without knowing their wavelength; only after Max von Laue showed in 1912 that crystals diffract X-rays were their very short wavelengths established, opening the way to X-ray crystallography and transforming materials science, alongside the medical imaging that had followed the discovery itself. Similarly, the ability to precisely control the wavelength of light emitted by lasers has enabled breakthroughs in fields ranging from optical data storage to precision manufacturing.

The story of wavelength measurement is, in essence, the story of scientific and technological progress itself, each advancement building upon previous discoveries to unlock new possibilities and reshape our understanding of the world.

The intimate connection between wavelength, frequency, and the speed of light highlights the elegance of wave physics. But understanding this relationship is only the first step in truly grasping the significance of wavelength. We now turn our attention to defining wavelength more formally, exploring the vast electromagnetic spectrum it populates, and introducing the units we use to quantify its magnitude.

Fundamentals of Wavelength: Definition, Spectrum, and Units

At its core, wavelength is the spatial period of a wave – the distance over which the wave’s shape repeats. It’s commonly denoted by the Greek letter lambda (λ). This fundamental property applies to all types of waves, from the familiar ripples in a pond to the more abstract electromagnetic radiation that permeates the universe.

Defining Wavelength in Wave Phenomena

More precisely, for a periodic wave, the wavelength is the distance between two consecutive points in phase, such as crest to crest or trough to trough. Imagine a sinusoidal wave traveling through space. The distance between successive peaks of that sine wave is its wavelength.

Wavelength depends on both the wave and its surroundings. The source generating the wave sets its frequency, while the medium through which the wave travels sets its speed; for a given frequency, a slower medium produces a shorter wavelength.

The Electromagnetic Spectrum: A Wavelength Tapestry

The electromagnetic spectrum is the continuous range of all possible frequencies of electromagnetic radiation. It is often described in terms of wavelength instead, because each frequency corresponds to exactly one vacuum wavelength through c = λν.

This vast spectrum encompasses everything from extremely long radio waves to incredibly short gamma rays, each with distinct properties and applications.

Exploring the Spectrum’s Regions

- Radio Waves: These have the longest wavelengths, from about a millimeter to hundreds of kilometers. They are used in broadcasting, communication, and navigation.

- Microwaves: Occupying the short-wavelength end of the radio range, roughly 1 millimeter to 1 meter, microwaves are used in radar, satellite communication, and, of course, microwave ovens.

- Infrared Radiation: We perceive infrared mainly as heat. It is used in thermal imaging, remote controls, and fiber optic communication.

- Visible Light: This narrow band of the spectrum, roughly 380 to 750 nanometers, is what our eyes can detect, ranging from red (longest visible wavelength) to violet (shortest visible wavelength).

- Ultraviolet Radiation: UV radiation has shorter wavelengths than visible light and can damage living tissue. It is used in sterilization and tanning beds.

- X-rays: X-rays are highly energetic and can penetrate soft tissues, making them useful in medical imaging.

- Gamma Rays: These have the shortest wavelengths and highest photon energies. They are produced by radioactive decay and other nuclear processes and are used in cancer treatment.

Each region of the electromagnetic spectrum interacts with matter in different ways, depending on the wavelength. This interaction forms the basis for many technologies and scientific investigations.
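To make the band layout concrete, here is a small Python sketch that maps a wavelength to its approximate region. The band edges are assumptions: published boundaries differ between sources, microwaves are often counted as the short end of the radio range, and X-rays and gamma rays overlap in practice (they are frequently distinguished by origin rather than wavelength). This sketch simply treats 1 mm to 1 m as "microwave".

```python
# Approximate shortest wavelength (in metres) for each region, longest region first.
# Band edges are conventions that differ between sources; these values are assumptions.
BANDS = [
    (1.0, "radio"),          # longer than about 1 m
    (1e-3, "microwave"),     # about 1 mm to 1 m
    (750e-9, "infrared"),    # about 750 nm to 1 mm
    (380e-9, "visible"),     # about 380 nm to 750 nm
    (10e-9, "ultraviolet"),  # about 10 nm to 380 nm
    (10e-12, "X-ray"),       # about 0.01 nm to 10 nm
]


def classify_wavelength(wavelength_m: float) -> str:
    """Return the approximate spectral region for a vacuum wavelength in metres."""
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    for shortest, name in BANDS:
        if wavelength_m >= shortest:
            return name
    # Anything shorter than the assumed X-ray edge is treated as gamma radiation.
    return "gamma ray"


if __name__ == "__main__":
    for w in (3.0, 0.12, 1550e-9, 550e-9, 254e-9, 0.1e-9, 1e-12):
        print(f"{w:.3e} m -> {classify_wavelength(w)}")
```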

Units of Wavelength Measurement: Nanometers and Angstroms

Because wavelengths can vary across such a vast range, scientists use different units to express their measurements conveniently.

- Nanometer (nm): One nanometer is equal to one billionth of a meter (10⁻⁹ m). This unit is commonly used to measure wavelengths in the visible and ultraviolet regions of the spectrum, as well as in nanoscale technologies.

- Angstrom (Å): One Angstrom is equal to 0.1 nanometers (10⁻¹⁰ m). It is a non-SI unit, but it is still frequently used in atomic physics, chemistry, and structural biology due to its convenient scale for expressing atomic dimensions and interatomic distances.

While the meter is the SI base unit for length, nanometers and Angstroms offer a more practical scale for dealing with the tiny wavelengths of light and other electromagnetic radiation.

Understanding these fundamental units allows for accurate communication and precise calculations in scientific research and technological development.
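Unit conversions are a frequent source of slips, so it can help to route them through one table of unit sizes. The Python sketch below is a minimal, hypothetical helper (the unit keys are illustrative choices); 589.0 nm is used only as an example value near the sodium D lines.

```python
# Size of each unit in metres.
UNIT_IN_METRES = {
    "m": 1.0,
    "um": 1e-6,         # micrometre
    "nm": 1e-9,         # nanometre
    "angstrom": 1e-10,  # angstrom (non-SI)
}


def convert_length(value: float, from_unit: str, to_unit: str) -> float:
    """Convert a length between any two units listed in UNIT_IN_METRES."""
    try:
        return value * UNIT_IN_METRES[from_unit] / UNIT_IN_METRES[to_unit]
    except KeyError as err:
        raise ValueError(f"unknown unit: {err.args[0]}") from None


if __name__ == "__main__":
    # Example value near the sodium D lines.
    print(f"{convert_length(589.0, 'nm', 'angstrom'):.1f} Å")
    print(f"{convert_length(589.0, 'nm', 'm'):.3e} m")
```

Going through metres as the common base keeps every conversion consistent, and adding a unit means adding one dictionary entry.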

Direct measurement of wavelength provides tangible access to these abstract concepts.

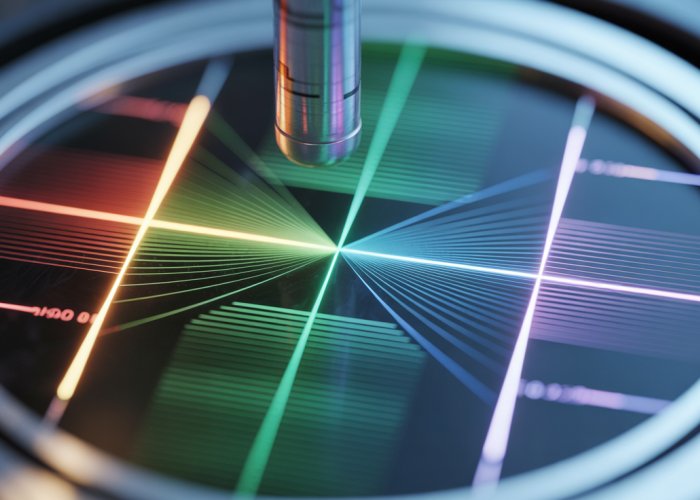

Direct Measurement Techniques: Spectrometers and Interferometers

Direct measurement techniques offer a practical way to determine wavelength. These methods rely on instruments that directly interact with the wave to reveal its properties. Among these, spectrometers and interferometers stand out as crucial tools. Spectrometers analyze the spectral composition of light, while interferometers use interference patterns to achieve extremely precise measurements. Let’s delve deeper into each of these techniques.

Spectrometer Analysis: Deconstructing Light

A spectrometer is an instrument designed to measure the properties of light over a specific portion of the electromagnetic spectrum. It achieves this by separating incoming light into its constituent wavelengths and then measuring the intensity of each wavelength. The core principle relies on dispersing light and quantifying its components.

Principle of Operation

At its heart, a spectrometer works by dispersing light using a prism or a diffraction grating. This dispersion separates the light into a spectrum of colors, each corresponding to a specific wavelength. A detector then measures the intensity of light at each wavelength. The result is a spectral graph that plots intensity as a function of wavelength, revealing the light source’s unique "fingerprint."
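Once a spectrometer has produced intensity values on a calibrated wavelength grid, reading off a line's wavelength usually means locating a peak that falls between sample points. The Python sketch below uses three-point parabolic interpolation, one common choice but not the method of any particular instrument; the function name and the synthetic 532.3 nm line are hypothetical, and evenly spaced samples are assumed.

```python
import math
from typing import Sequence


def peak_wavelength(wavelengths: Sequence[float], intensities: Sequence[float]) -> float:
    """Estimate the wavelength of the strongest peak in an evenly sampled spectrum.

    Picks the brightest sample, then fits a parabola through it and its two
    neighbours to estimate where the true maximum lies between samples.
    """
    if len(wavelengths) != len(intensities) or len(wavelengths) < 3:
        raise ValueError("need at least three matching samples")
    i = max(range(len(intensities)), key=lambda k: intensities[k])
    if i == 0 or i == len(intensities) - 1:
        return wavelengths[i]  # peak on the edge: no neighbour on one side
    y0, y1, y2 = intensities[i - 1], intensities[i], intensities[i + 1]
    curvature = y0 - 2.0 * y1 + y2
    if curvature == 0:
        return wavelengths[i]  # flat top: keep the sampled position
    offset = 0.5 * (y0 - y2) / curvature  # vertex offset, in units of the sample spacing
    step = wavelengths[i + 1] - wavelengths[i]
    return wavelengths[i] + offset * step


if __name__ == "__main__":
    # Synthetic spectrum: one Gaussian line centred at 532.3 nm, sampled every 1 nm.
    grid = [520.0 + k for k in range(26)]
    spectrum = [math.exp(-((w - 532.3) ** 2) / (2 * 2.0 ** 2)) for w in grid]
    print(f"estimated peak: {peak_wavelength(grid, spectrum):.2f} nm")
```

The interpolation recovers the line centre to a small fraction of the 1 nm sample spacing, which is why sub-pixel peak fitting is routinely used when reading spectra.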

Types and Applications

Spectrometers come in various designs, each optimized for specific applications. Prism spectrometers use the refractive properties of a prism to separate light, whereas grating spectrometers use diffraction gratings. The choice depends on the required resolution and spectral range.

- In chemistry, spectrometers are used for identifying substances by analyzing their absorption and emission spectra.

- In astronomy, they help determine the composition of stars and galaxies by studying the light they emit.

- In materials science, spectrometers are essential for characterizing the optical properties of materials.

Spectrometer Examples

- UV-Vis Spectrometers: Analyze the absorption and transmission of ultraviolet and visible light by liquids or solids. Applications include chemical analysis and quality control.

- Infrared (IR) Spectrometers: Measure the absorption of infrared radiation by molecules, which reveals their vibrational modes. Essential for identifying organic compounds.

- Atomic Absorption Spectrometers: Determine the concentration of elements in a sample by measuring the absorption of light by free atoms. Widely used in environmental monitoring and food safety.

Interferometer Insights: Precision Through Interference

Interferometers offer an alternative approach to measuring wavelength, based on the principle of wave interference. By splitting a beam of light and then recombining it, interferometers create interference patterns that are highly sensitive to changes in the path length of the light beams. This sensitivity makes interferometers capable of extremely precise wavelength measurements.

The Michelson Interferometer

The Michelson interferometer is a classic example, renowned for its simplicity and versatility. It splits a beam of light into two paths, each reflected by a mirror. One mirror is fixed, while the other can be moved. When the beams recombine, they create an interference pattern. The changes in this pattern, as the movable mirror is adjusted, allow for precise determination of wavelength.

Measuring Wavelength with Interference Patterns

Interferometers measure wavelengths by analyzing the interference patterns produced when two or more beams of light are combined. The key is that the interference pattern’s characteristics are directly related to the wavelength of the light and the path length difference between the beams.

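By carefully counting the fringes that pass a fixed point as one of the mirrors is moved, it’s possible to determine the wavelength with exceptional accuracy. Moving the mirror by a distance Δd changes the path difference between the beams by 2Δd, so each fringe corresponds to a mirror displacement of λ/2, and counting N fringes gives λ = 2Δd/N. The resulting data allows scientists and engineers to quantify wavelengths with a precision that simpler spectroscopic techniques struggle to match.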
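In a Michelson interferometer, moving one mirror by Δd changes the path difference by 2Δd, so each passing fringe marks a displacement of λ/2 and N fringes give λ = 2Δd/N. The Python sketch below applies that relation; the function names and the measurement values (100 fringes over 31.64 µm) are hypothetical, and it only models the ±1 fringe miscount, not mirror-position error.

```python
def wavelength_from_fringes(mirror_displacement_m: float, fringe_count: int) -> float:
    """Return λ = 2Δd / N for a Michelson interferometer.

    Moving one mirror by Δd changes the path difference by 2Δd,
    so each fringe that passes the detector marks a displacement of λ / 2.
    """
    if fringe_count <= 0:
        raise ValueError("fringe count must be positive")
    if mirror_displacement_m <= 0:
        raise ValueError("mirror displacement must be positive")
    return 2.0 * mirror_displacement_m / fringe_count


def relative_count_uncertainty(fringe_count: int, miscount: int = 1) -> float:
    """Fractional error in λ caused by miscounting `miscount` fringes."""
    return miscount / fringe_count


if __name__ == "__main__":
    # Hypothetical measurement: 100 fringes over 31.64 µm of mirror travel.
    lam = wavelength_from_fringes(31.64e-6, 100)
    print(f"wavelength: {lam * 1e9:.1f} nm")
    # Counting more fringes shrinks the effect of a one-fringe miscount.
    for n in (100, 10_000):
        print(f"N = {n}: ±{relative_count_uncertainty(n):.0e} relative")
```

The second loop illustrates why interferometric measurements scan the mirror over long distances: a single miscounted fringe matters far less when thousands are counted.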

Practical Applications of Interferometers

The high precision of interferometers makes them invaluable in numerous fields.

- They are used in fundamental physics research, such as the famous Michelson-Morley experiment, which sought to detect the luminiferous ether.

- In metrology, interferometers are used for calibrating length standards and measuring distances with extreme accuracy.

- In telecommunications, they are used for characterizing optical components and ensuring the performance of fiber optic networks.

Direct methods, such as those involving spectrometers and interferometers, give us one approach to measuring wavelength. However, wavelength can also be determined using indirect techniques. These methods rely on understanding how light interacts with specific structures or materials to infer its wavelength, and they provide alternative pathways for optical measurement and calibration.

Indirect Measurement Techniques: Diffraction Gratings and Laser References

While spectrometers and interferometers offer direct routes to wavelength measurement, indirect methods provide ingenious alternatives. These techniques leverage well-understood physical phenomena to infer wavelength, expanding our toolkit for optical analysis. Two prominent examples are the use of diffraction gratings and the application of lasers as calibration standards.

Diffraction Grating Demystified

Diffraction gratings are optical components with periodic structures that split and diffract light. They are essential for wavelength separation and are integral to many optical instruments.

How Diffraction Gratings Separate Light

The magic of a diffraction grating lies in its ability to separate polychromatic light into its constituent wavelengths. This separation occurs because each wavelength is diffracted at a slightly different angle.

The periodic structure of the grating, typically a series of closely spaced parallel grooves, causes interference patterns when light interacts with it. This results in the light being dispersed into different directions based on its wavelength. Shorter wavelengths are diffracted less than longer wavelengths, creating the familiar spectral separation.

The Grating Equation

For light striking the grating at normal incidence, the behavior of diffraction gratings is described by the grating equation:

d sin(θ) = mλ

Where:

- d is the spacing between the grating’s grooves.

- θ is the angle of diffraction.

- m is the order of the diffraction (an integer representing the different interference maxima).

- λ is the wavelength of the light.

This equation is fundamental to understanding how diffraction gratings work. It allows us to calculate the wavelength of light if we know the grating spacing, the diffraction angle, and the order of the diffraction. By measuring these parameters, we can accurately determine the wavelength of the incident light.
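The grating equation d sin(θ) = mλ can be solved for either unknown. The Python sketch below does both, assuming normal incidence as the simple form of the equation does; the 600 lines/mm grating and the 532 nm test wavelength are illustrative values, and the function names are hypothetical.

```python
import math


def groove_spacing_m(lines_per_mm: float) -> float:
    """Groove spacing d in metres for a grating ruled with `lines_per_mm`."""
    return 1e-3 / lines_per_mm


def wavelength_from_angle(d_m: float, theta_deg: float, order: int = 1) -> float:
    """Solve d sin(θ) = mλ for λ (normal incidence assumed)."""
    if order == 0:
        raise ValueError("zeroth order carries no wavelength information")
    return d_m * math.sin(math.radians(theta_deg)) / order


def diffraction_angle_deg(d_m: float, wavelength_m: float, order: int = 1) -> float:
    """Solve d sin(θ) = mλ for θ; raises if that order cannot exist (|sin θ| > 1)."""
    s = order * wavelength_m / d_m
    if abs(s) > 1.0:
        raise ValueError(f"order {order} does not exist for this wavelength and spacing")
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    d = groove_spacing_m(600)  # illustrative 600 lines/mm grating
    theta = diffraction_angle_deg(d, 532e-9)
    print(f"first order at {theta:.2f} degrees")
    print(f"recovered wavelength: {wavelength_from_angle(d, theta) * 1e9:.1f} nm")
```

The explicit check on |sin θ| reflects a real constraint: for a given groove spacing, only a limited number of orders exist, and higher orders are diffracted at larger angles.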

Applications in Optical Instruments

Diffraction gratings are found in a wide array of optical instruments. They are used in spectrometers as the dispersing element, replacing or complementing prisms.

They’re present in monochromators to select narrow bands of wavelengths, and are critical in various spectroscopic techniques, from Raman spectroscopy to UV-Vis spectroscopy, where precise wavelength determination is essential for sample analysis. Their versatility and effectiveness make them indispensable tools for optical analysis.

Laser Calibration

Lasers provide another indirect but highly accurate method for wavelength determination and instrument calibration.

Lasers as Stable Light Sources

Lasers emit highly monochromatic light, and frequency-stabilized lasers, such as the iodine-stabilized helium-neon laser, also hold their wavelength extremely steady. The light produced by such a laser spans a very narrow range of wavelengths, making it an ideal reference source.

The precise and well-defined wavelength of laser light allows for the accurate calibration of optical instruments. Unlike traditional light sources that emit a broad spectrum of wavelengths, lasers provide a single, well-defined wavelength that can be used as a benchmark.

Calibrating Optical Instruments

Laser calibration is a cornerstone of ensuring the accuracy and reliability of optical instruments. By directing laser light of a known wavelength into an instrument, any deviations in the instrument’s readings can be identified and corrected.

This is particularly important for instruments like spectrometers, where accurate wavelength determination is crucial for interpreting spectral data. Laser calibration helps to minimize systematic errors and ensure that the instrument provides accurate and consistent measurements. The stability and monochromaticity of laser light make them invaluable for establishing traceable standards in optical metrology.
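A single laser line reveals only an offset; several lines of known wavelength let you fit how the spectrometer's detector position maps to wavelength. The Python sketch below fits a straight line by least squares. The linear model is an assumption (many instruments need a low-order polynomial), the laser wavelengths are nominal values chosen for illustration, and the pixel positions are hypothetical.

```python
from typing import Sequence, Tuple


def fit_linear_calibration(
    pixels: Sequence[float], reference_nm: Sequence[float]
) -> Tuple[float, float]:
    """Least-squares fit of wavelength = slope * pixel + intercept."""
    n = len(pixels)
    if n != len(reference_nm) or n < 2:
        raise ValueError("need at least two matching (pixel, wavelength) pairs")
    mean_x = sum(pixels) / n
    mean_y = sum(reference_nm) / n
    sxx = sum((x - mean_x) ** 2 for x in pixels)
    if sxx == 0:
        raise ValueError("reference lines must fall on different pixels")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(pixels, reference_nm))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def pixel_to_nm(pixel: float, slope: float, intercept: float) -> float:
    """Apply the fitted calibration to a detector pixel position."""
    return slope * pixel + intercept


if __name__ == "__main__":
    # Nominal laser lines (nm) and the pixels where a hypothetical
    # spectrometer records their peaks.
    lasers_nm = [405.0, 532.0, 632.8]
    peak_pixels = [275.0, 910.0, 1414.0]
    slope, intercept = fit_linear_calibration(peak_pixels, lasers_nm)
    residuals = [y - pixel_to_nm(x, slope, intercept) for x, y in zip(peak_pixels, lasers_nm)]
    print(f"slope {slope:.4f} nm/pixel, intercept {intercept:.2f} nm")
    print("residuals (nm):", [round(r, 3) for r in residuals])
```

Inspecting the residuals is the practical check: large or patterned residuals suggest the linear model is too simple for the instrument.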

Indirect methods, such as diffraction gratings and laser calibration, greatly expand our ability to determine wavelength. However, achieving truly accurate wavelength measurements isn’t simply a matter of selecting the right technique. The environment in which we measure, and the inherent limitations of our instruments, can significantly impact the results.

Factors Affecting Measurement Accuracy: Environmental and Instrumental

The quest for precise wavelength measurement is a delicate one, susceptible to a variety of influences that can skew results. These factors, stemming from both the surrounding environment and the measuring instruments themselves, must be carefully considered and mitigated to ensure accuracy. Understanding these potential pitfalls is crucial for reliable spectroscopic analysis and optical calibration.

Environmental Influences on Wavelength Measurement

The surrounding environment plays a surprisingly significant role in the accuracy of wavelength measurements. Factors like temperature, pressure, and humidity can all introduce subtle but impactful distortions.

Temperature’s Thermal Expansion Effects

Temperature variations, for instance, cause optical components and their mounts to expand or contract, changing dimensions such as the groove spacing of a grating or the path lengths inside an interferometer and thus shifting wavelength readings. Maintaining a stable temperature is therefore essential for precise measurements.
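To get a feel for the size of thermal effects, consider a grating instrument whose grating warms up. In the simplified model below (an assumption: only the groove spacing changes, d = d₀(1 + αΔT), while the instrument still converts angle to wavelength using the nominal d₀), the grating equation gives a reported value of λ/(1 + αΔT). The expansion coefficient of about 2.3 × 10⁻⁵ per kelvin, roughly that of aluminium, is an assumed example value.

```python
def reported_wavelength_nm(true_nm: float, alpha_per_k: float, delta_t_k: float) -> float:
    """Wavelength a grating instrument reports after its grating warms by delta_t_k.

    Simplified model: only the groove spacing changes, d = d0 * (1 + α ΔT), while
    the instrument still converts angle to wavelength with the nominal d0.
    From d sin(θ) = mλ, the reported value is λ_true / (1 + α ΔT).
    """
    return true_nm / (1.0 + alpha_per_k * delta_t_k)


if __name__ == "__main__":
    # Assumed expansion coefficient, roughly that of aluminium.
    alpha = 2.3e-5
    for dt in (1.0, 5.0):
        shift = reported_wavelength_nm(500.0, alpha, dt) - 500.0
        print(f"ΔT = {dt:.0f} K: reading shifts by {shift:.4f} nm")
```

Even a few kelvin produce shifts of a few hundredths of a nanometer at 500 nm in this model, which is significant for high-resolution work.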

Pressure and Density of Air

Pressure changes affect the density of air, which in turn alters its refractive index. This is particularly relevant when measuring wavelengths in air, as variations in refractive index will directly influence the speed and therefore the apparent wavelength of light.
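A crude way to see the pressure effect is to assume that (n − 1) for air scales with gas density, and hence with P/T for an ideal gas. The Python sketch below does this from an assumed reference value of (n − 1) ≈ 2.7 × 10⁻⁴ at 101 325 Pa and 288.15 K; precise work uses empirical formulas such as the Edlén equation instead. The vacuum wavelength of about 632.991 nm for a helium-neon laser is used as an example.

```python
# Assumed reference values: (n - 1) of about 2.7e-4 for dry air in the visible
# at 101 325 Pa and 288.15 K. Precise work uses an empirical formula instead.
N_MINUS_1_REF = 2.7e-4
P_REF_PA = 101_325.0
T_REF_K = 288.15


def air_index(pressure_pa: float, temperature_k: float) -> float:
    """Crude ideal-gas scaling: (n - 1) is proportional to density, i.e. to P / T."""
    return 1.0 + N_MINUS_1_REF * (pressure_pa / P_REF_PA) * (T_REF_K / temperature_k)


def air_wavelength_nm(vacuum_nm: float, pressure_pa: float, temperature_k: float) -> float:
    """Wavelength in air for a given vacuum wavelength: λ_air = λ_vac / n."""
    return vacuum_nm / air_index(pressure_pa, temperature_k)


if __name__ == "__main__":
    vac = 632.991  # approximate vacuum wavelength of a helium-neon laser, nm
    sea_level = air_wavelength_nm(vac, 101_325.0, 288.15)
    low_pressure = air_wavelength_nm(vac, 95_000.0, 288.15)
    print(f"shift from a ~6 % pressure drop: {low_pressure - sea_level:.4f} nm")
```

The shift is around a hundredth of a nanometer, small for everyday spectroscopy but far larger than the resolution of interferometric length measurements.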

Humidity and Absorption

Similarly, humidity can introduce errors due to water vapor absorption of light at specific wavelengths, leading to skewed intensity measurements. Controlling humidity levels or compensating for its effects is necessary in certain spectral regions.

Instrumental Errors: A Deep Dive

Beyond environmental factors, the instruments themselves are subject to a range of potential errors that can compromise accuracy. These errors can arise from manufacturing imperfections, calibration issues, or limitations in the instrument’s design.

Calibration Imperfections and Zero Errors

Calibration errors are a common source of inaccuracy. If the instrument is not properly calibrated against a known standard, its readings will be systematically offset.

Zero errors, where the instrument provides a non-zero reading even in the absence of a signal, can also introduce significant inaccuracies, especially when measuring weak signals.

Resolution Limits and Signal-to-Noise Ratio

The resolution of the instrument, or its ability to distinguish between closely spaced wavelengths, limits the precision of measurement. Instruments with lower resolution will struggle to accurately resolve narrow spectral features.
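Resolution can be quantified as a resolving power R = λ/Δλ. For a diffraction grating, a standard result (brought in here, not stated above) is that the theoretical resolving power is R = mN, where m is the diffraction order and N the number of illuminated grooves. The Python sketch below checks whether two lines can be separated; the function names are hypothetical, and the sodium doublet values of roughly 589.0 and 589.6 nm are used as an example.

```python
def required_resolving_power(line1_nm: float, line2_nm: float) -> float:
    """R = λ / Δλ needed to separate two lines (λ taken as their mean)."""
    separation = abs(line2_nm - line1_nm)
    if separation == 0:
        raise ValueError("lines must differ in wavelength")
    return 0.5 * (line1_nm + line2_nm) / separation


def grating_resolving_power(order: int, illuminated_grooves: int) -> float:
    """Theoretical resolving power of a diffraction grating, R = mN."""
    return order * illuminated_grooves


def can_resolve(line1_nm: float, line2_nm: float, order: int, illuminated_grooves: int) -> bool:
    """True if the grating's theoretical R meets the R required for the two lines."""
    needed = required_resolving_power(line1_nm, line2_nm)
    return grating_resolving_power(order, illuminated_grooves) >= needed


if __name__ == "__main__":
    # Sodium doublet, approximately 589.0 nm and 589.6 nm.
    print(f"required R ≈ {required_resolving_power(589.0, 589.6):.0f}")
    for grooves in (500, 1000):
        print(grooves, "grooves, first order:", can_resolve(589.0, 589.6, 1, grooves))
```

Real instruments fall short of the theoretical R because of aberrations, slit width, and detector pixel size, so this check gives a best case.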

The signal-to-noise ratio (SNR) also plays a critical role. High levels of noise can obscure the true signal, making it difficult to determine the precise wavelength of a spectral line.

Detector Non-Linearity

Furthermore, detectors used in wavelength measurement systems can exhibit non-linear behavior, where their response is not directly proportional to the intensity of light. This can lead to inaccurate measurements, particularly at high or low light levels.

The Refractive Index: A Crucial Parameter

The refractive index of the medium through which light travels is a critical parameter in wavelength measurement. The refractive index describes how much the speed of light is reduced in a particular medium compared to its speed in a vacuum.

Its Impact on Wavelength

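For a fixed frequency, the wavelength in a medium equals the vacuum wavelength divided by the refractive index (λ = λ₀/n). Accurate knowledge of the refractive index is therefore essential for converting between wavelengths measured in different media, for example between air and vacuum wavelengths.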
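Because frequency does not change when light enters a medium, converting between vacuum and in-medium wavelengths only needs the refractive index. The Python sketch below shows both directions; the indices for water (about 1.333) and a typical glass (about 1.5) are assumed illustrative values, and the function names are hypothetical.

```python
def wavelength_in_medium(vacuum_wavelength_nm: float, n: float) -> float:
    """λ = λ0 / n: the frequency is unchanged, the wave slows, so it shortens."""
    if n <= 0:
        raise ValueError("refractive index must be positive")
    return vacuum_wavelength_nm / n


def vacuum_wavelength(medium_wavelength_nm: float, n: float) -> float:
    """Inverse conversion: λ0 = n λ."""
    if n <= 0:
        raise ValueError("refractive index must be positive")
    return medium_wavelength_nm * n


if __name__ == "__main__":
    # Illustrative refractive indices (assumed): water ≈ 1.333, a typical glass ≈ 1.5.
    for name, n in (("water", 1.333), ("glass", 1.5)):
        print(f"600 nm in vacuum -> {wavelength_in_medium(600.0, n):.1f} nm in {name}")
```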

Dispersion and Correction

Furthermore, the refractive index can vary with wavelength – a phenomenon known as dispersion. This means that different wavelengths of light will be refracted differently by a given medium, which can introduce errors if not properly accounted for. Accurate knowledge of the refractive index and its dependence on wavelength is crucial for correcting these effects and ensuring accurate measurements.
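One common empirical model of dispersion in transparent materials, valid away from absorption bands, is Cauchy's equation n(λ) = A + B/λ². The source does not specify a model; this Python sketch swaps in Cauchy's two-term form with illustrative coefficients (A = 1.5046, B = 0.00420 µm², roughly those quoted for a borosilicate crown glass) to show how much error a single fixed index introduces.

```python
def cauchy_index(wavelength_um: float, a: float, b_um2: float) -> float:
    """Two-term Cauchy model n(λ) = A + B / λ², with λ in micrometres."""
    if wavelength_um <= 0:
        raise ValueError("wavelength must be positive")
    return a + b_um2 / wavelength_um ** 2


if __name__ == "__main__":
    # Illustrative (assumed) coefficients, roughly those of a crown glass.
    A, B = 1.5046, 0.00420
    for lam in (0.4861, 0.5893, 0.6563):
        print(f"λ = {lam * 1000:.1f} nm: n = {cauchy_index(lam, A, B):.5f}")
    # Using the 589.3 nm index for every wavelength mis-states the blue end.
    n_fixed = cauchy_index(0.5893, A, B)
    blue_true = 0.4861 / cauchy_index(0.4861, A, B)  # wavelength inside the glass, µm
    blue_naive = 0.4861 / n_fixed
    print(f"error at 486.1 nm from ignoring dispersion: {(blue_naive - blue_true) * 1000:.2f} nm")
```

Shorter wavelengths see a higher index (normal dispersion), so assuming one index for all wavelengths shifts blue in-glass wavelengths by on the order of a nanometer in this example.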

In conclusion, achieving precise wavelength measurements requires careful attention to both environmental conditions and instrumental limitations. By understanding and mitigating the effects of temperature, pressure, humidity, calibration errors, resolution limits, and the refractive index, we can significantly improve the accuracy and reliability of our optical analyses.

Applications of Wavelength Measurement: Spectroscopy and Material Identification

The ability to precisely measure wavelength isn’t merely an academic exercise; it’s a cornerstone of numerous scientific and technological applications. Among the most impactful is its pivotal role in spectroscopy, a technique that unveils the very composition of matter. Beyond spectroscopy, the broader utilization of the electromagnetic spectrum empowers us to identify materials based on their unique interactions with light.

Spectroscopy: Unlocking Material Composition Through Light

Spectroscopy, at its core, is the study of how matter interacts with electromagnetic radiation. By analyzing the wavelengths of light absorbed, emitted, or scattered by a substance, scientists can determine its elemental and molecular composition. This powerful tool has revolutionized fields ranging from chemistry and astronomy to environmental science and medicine.

The Principles of Spectroscopic Analysis

The foundation of spectroscopy rests on the principle that each element and molecule possesses a unique set of energy levels. When light interacts with a sample, specific wavelengths corresponding to the energy differences between these levels are absorbed. The resulting absorption spectrum acts as a fingerprint, revealing the presence and concentration of various constituents within the material.
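The link between energy levels and absorbed wavelengths is λ = hc/ΔE. The Python sketch below applies it to hydrogen, using the Bohr-model levels Eₙ = −13.6057 eV/n² (an idealization that ignores the finite nuclear mass); the n = 3 to n = 2 gap lands close to the well-known red Balmer line near 656 nm. The function names are hypothetical.

```python
# Exact SI constants.
PLANCK_J_S = 6.626_070_15e-34
LIGHT_SPEED_M_S = 299_792_458.0
JOULES_PER_EV = 1.602_176_634e-19


def wavelength_nm_from_gap(delta_e_ev: float) -> float:
    """Photon wavelength (nm) for a transition with energy gap delta_e_ev: λ = hc / ΔE."""
    if delta_e_ev <= 0:
        raise ValueError("energy gap must be positive")
    return PLANCK_J_S * LIGHT_SPEED_M_S / (delta_e_ev * JOULES_PER_EV) * 1e9


def bohr_hydrogen_level_ev(n: int) -> float:
    """Bohr-model energy of hydrogen level n (infinite nuclear mass approximation)."""
    return -13.605_693 / n ** 2


if __name__ == "__main__":
    gap = bohr_hydrogen_level_ev(3) - bohr_hydrogen_level_ev(2)
    print(f"n=3 -> n=2 gap: {gap:.3f} eV, wavelength ≈ {wavelength_nm_from_gap(gap):.1f} nm")
```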

Types of Spectroscopy

Numerous spectroscopic techniques exist, each tailored to specific applications and wavelength ranges. Atomic absorption spectroscopy (AAS) is widely used for determining the concentration of specific elements in a sample. Infrared (IR) spectroscopy probes the vibrational modes of molecules, providing insights into their structure and bonding. Ultraviolet-visible (UV-Vis) spectroscopy examines electronic transitions, revealing information about the electronic structure of molecules and materials.

Applications Across Disciplines

The versatility of spectroscopy is evident in its widespread adoption across diverse fields. In chemistry, it’s a fundamental tool for identifying and quantifying compounds. Astronomers use spectroscopy to analyze the light from distant stars and galaxies, determining their elemental composition, temperature, and velocity. In environmental science, spectroscopy is used to monitor air and water quality, detecting pollutants and contaminants. The medical field leverages spectroscopy for diagnostics, such as analyzing blood samples to detect disease.

Material Identification Through the Electromagnetic Spectrum

Beyond the targeted analysis offered by spectroscopy, the broader electromagnetic spectrum offers a diverse toolkit for material identification. Different regions of the spectrum interact with matter in distinct ways, providing a wealth of information about a material’s properties.

Harnessing Different Wavelengths

Radio waves, microwaves, infrared radiation, visible light, ultraviolet light, X-rays, and gamma rays—each wavelength range provides unique insights. For example, X-rays are used in radiography to visualize the internal structure of objects, while infrared thermography reveals temperature variations by detecting emitted infrared radiation.

Reflectance and Absorption Patterns

The way a material reflects or absorbs light across the electromagnetic spectrum is a unique characteristic. This principle is used in remote sensing, where satellite-based sensors measure the reflectance of different surfaces to identify vegetation types, mineral deposits, and other features. Similarly, the color of an object is determined by the wavelengths of visible light it reflects.

Material-Specific Signatures

Just as each person has a unique fingerprint, each material possesses a unique spectral signature. By analyzing how a material interacts with different wavelengths of electromagnetic radiation, we can identify it with a high degree of certainty. This approach is used in quality control, security screening, and countless other applications where rapid and accurate material identification is essential.
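A simple way to compare a measured spectrum against a library of known signatures is to score their shapes with cosine similarity, which ignores overall brightness. The Python sketch below is a minimal illustration, not a production identification method; the library entries and the measured spectrum are hypothetical, and all spectra are assumed to be sampled at the same wavelengths.

```python
import math
from typing import Dict, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Similarity of two spectra by shape: 1.0 means identical up to a scale factor."""
    if len(a) != len(b):
        raise ValueError("spectra must be sampled at the same wavelengths")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("spectra must not be all zeros")
    return dot / norm


def identify(measured: Sequence[float], library: Dict[str, Sequence[float]]) -> Tuple[str, float]:
    """Return (best_name, score) for the library spectrum most similar in shape."""
    best = max(library, key=lambda name: cosine_similarity(measured, library[name]))
    return best, cosine_similarity(measured, library[best])


if __name__ == "__main__":
    # Hypothetical reflectance spectra sampled at the same five wavelengths.
    library = {
        "material A": [0.10, 0.20, 0.60, 0.80, 0.70],
        "material B": [0.70, 0.60, 0.30, 0.20, 0.10],
        "material C": [0.40, 0.40, 0.40, 0.40, 0.40],
    }
    measured = [0.12, 0.21, 0.55, 0.82, 0.69]  # hypothetical noisy sample
    name, score = identify(measured, library)
    print(f"best match: {name} (similarity {score:.3f})")
```

Scoring by shape rather than absolute intensity means a dimly lit sample can still match its reference, though real systems typically add preprocessing and more robust classifiers.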

Master Wavelength: Frequently Asked Questions

This FAQ addresses common questions about wavelength measurement techniques discussed in "Master Wavelength: Measurement Techniques You Need To Know."

What are the most common methods for measuring wavelength?

The most common techniques use spectrometers, interferometers, and diffraction gratings. Each method offers different advantages depending on the wavelength range and the precision required.

How does a spectrometer measure wavelength?

A spectrometer separates light into its component wavelengths, then measures the intensity of each wavelength. This allows for the determination of the spectral composition of the light source and therefore the measurement of wavelength.

What is an interferometer, and how is it used in wavelength measurement?

An interferometer uses the principle of interference to measure distances, which can then be related to wavelength. By splitting a light beam and recombining the two parts after they travel different path lengths, it produces interference patterns that allow wavelength to be measured very precisely.

When would I choose a diffraction grating over other wavelength measurement methods?

Diffraction gratings are useful for measuring a broad spectrum of wavelengths simultaneously. They are commonly used in spectrometers and are well-suited for analyzing light sources with multiple spectral components, providing accurate measurement of wavelength across the spectrum.

Hopefully, you now have a clearer grasp of how to approach wavelength measurement! Go out and put these techniques to the test, and don’t be afraid to experiment. Happy measuring!