Understanding the principles of statistics is paramount when analyzing data, and one crucial concept in statistical analysis is variance between groups. ANOVA (Analysis of Variance) provides a structured approach to dissecting differences in means across multiple populations. The scientific community values interpretations of data that reduce error rates and strengthen the validity of research conclusions. These methods, pioneered by Ronald Fisher, provide critical insights when exploring variance between groups.

Understanding the Fundamentals of Variance

Before delving into the complexities of comparing group differences, it’s essential to establish a firm grasp on the foundational concept of variance itself. Understanding variance is the key to unlocking deeper insights in statistical analysis and interpreting the results of tests like ANOVA.

Defining Variance: Measuring Data Dispersion

At its core, variance is a statistical measure that quantifies the spread or dispersion of data points within a dataset.

It essentially tells us how much individual data points deviate from the average, or mean, of the entire dataset.

A high variance indicates that the data points are widely scattered, while a low variance suggests that they are clustered closely around the mean.

The basic calculation of variance involves several steps:

- Calculate the mean (average) of the dataset.

- For each data point, find the difference between that data point and the mean.

- Square each of these differences. Squaring ensures all values are non-negative and amplifies larger deviations.

- Sum up all the squared differences.

- Divide the sum of squared differences by the number of data points (for population variance) or by the number of data points minus 1 (for sample variance). The latter is used to provide an unbiased estimate of the population variance.

The result is the variance, often denoted by σ² (sigma squared) for population variance or s² for sample variance. The square root of the variance is the standard deviation.
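The steps above can be sketched in Python. This is a minimal illustration using only the standard library; the function name `variance_by_steps` and the sample values are assumptions made for the example, and the results are cross-checked against the built-in `statistics.variance` and `statistics.pvariance`.

```python
import statistics


def variance_by_steps(data, sample=True):
    """Compute variance by following the steps described in the text.

    sample=True divides by n - 1 (sample variance);
    sample=False divides by n (population variance).
    """
    n = len(data)
    mean = sum(data) / n                      # step 1: mean of the dataset
    deviations = [x - mean for x in data]     # step 2: difference from the mean
    squared = [d ** 2 for d in deviations]    # step 3: square each difference
    total = sum(squared)                      # step 4: sum of squared differences
    return total / (n - 1 if sample else n)   # step 5: divide by n - 1 or n


data = [2, 4, 6]  # illustrative values, not real measurements
sample_var = variance_by_steps(data, sample=True)
population_var = variance_by_steps(data, sample=False)
std_dev = sample_var ** 0.5  # standard deviation is the square root of the variance

print("sample variance:", sample_var, "(library:", statistics.variance(data), ")")
print("population variance:", population_var, "(library:", statistics.pvariance(data), ")")
print("sample standard deviation:", std_dev)
```

In practice the library functions are the safer choice; the hand-written version is only meant to make each step visible.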

Variance Between Groups: Comparing Different Populations

Variance between groups, also sometimes called "between-group variability," specifically refers to the variability observed across different populations or samples being compared.

It assesses the extent to which the means of these groups differ from each other.

Imagine comparing the test scores of students from three different schools. The variance between groups would reflect how much the average test scores differ from school to school.

A high variance between groups suggests that the group means are quite different. This could indicate that the factor differentiating the groups (e.g., school or treatment) has a substantial effect on the outcome being measured.

Conversely, a low variance between groups indicates that the group means are similar.

Variance Between vs. Within Groups: The Key Distinction

Understanding the difference between variance between groups and variance within groups is crucial for interpreting statistical analyses.

Variance within groups (also called "within-group variability" or "error variance") represents the variability of data points within each individual group. It reflects the random variation or individual differences that exist within each group, irrespective of any differences between the groups.

For example, even within a single school, students will have varying test scores. This variation is the variance within the school group.

The key distinction is this: variance between groups looks at the differences among the group means, while variance within groups looks at the spread of data within each group individually.

To illustrate further, consider an experiment testing the effectiveness of three different fertilizers on plant growth.

- Variance Between Groups: This would measure how much the average plant height differs among the three fertilizer groups. If fertilizer A leads to significantly taller plants on average than fertilizers B and C, the variance between groups will be high.

- Variance Within Groups: This would measure how much the plant heights vary within each fertilizer group. Even if all plants received fertilizer A, there would still be some variation in their heights due to other factors like sunlight, water, or individual plant genetics.

Numerical Example: Calculating Variance Between and Within

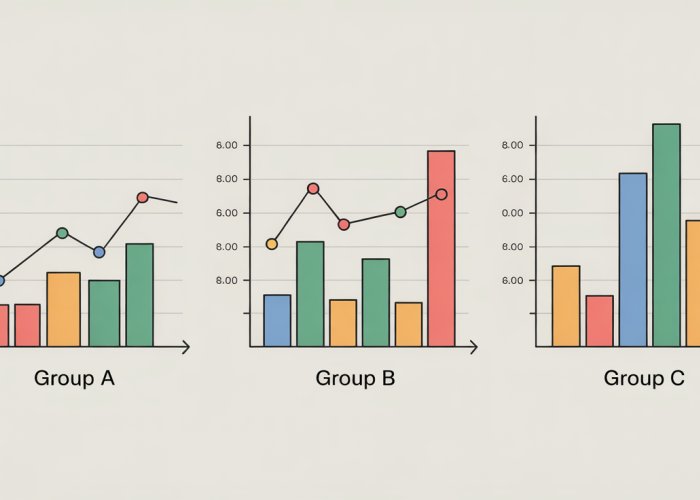

Let’s consider a simplified numerical example to illustrate the calculation of variance between and within groups.

Suppose we have three groups, each with three data points:

- Group 1: 2, 4, 6

- Group 2: 7, 9, 11

- Group 3: 12, 14, 16

First, we calculate the mean for each group:

- Mean of Group 1: (2 + 4 + 6) / 3 = 4

- Mean of Group 2: (7 + 9 + 11) / 3 = 9

- Mean of Group 3: (12 + 14 + 16) / 3 = 14

Next, we calculate the grand mean, which is the mean of all data points combined: (2+4+6+7+9+11+12+14+16)/9 = 9

Now, let’s calculate the variance between groups. This involves looking at how much each group’s mean differs from the grand mean:

- Calculate the squared difference between each group mean and the grand mean:
  - (4 – 9)² = 25
  - (9 – 9)² = 0
  - (14 – 9)² = 25

- Multiply each squared difference by the number of data points in that group (in this case, 3):
  - 25 × 3 = 75
  - 0 × 3 = 0
  - 25 × 3 = 75

- Sum these values: 75 + 0 + 75 = 150

- Divide by the degrees of freedom (number of groups – 1 = 3 – 1 = 2): 150 / 2 = 75

Therefore, the variance between groups is 75.
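The same between-group calculation can be reproduced in a few lines of Python. This is a sketch using the three example groups; the helper names `mean` and `between_group_variance` are assumptions for illustration. In ANOVA terminology the quantity computed here is usually called the mean square between groups.

```python
groups = [[2, 4, 6], [7, 9, 11], [12, 14, 16]]  # the three example groups


def mean(values):
    return sum(values) / len(values)


def between_group_variance(groups):
    """Sum of squares between groups divided by (number of groups - 1)."""
    all_points = [x for g in groups for x in g]
    grand_mean = mean(all_points)
    # Weight each squared deviation of a group mean by that group's size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    df_between = len(groups) - 1
    return ss_between / df_between


ms_between = between_group_variance(groups)
print(ms_between)  # 75.0, matching the hand calculation
```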

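To calculate the variance within groups, we calculate the variance within each group separately and then average them. A simple average works here because the groups are the same size; with unequal group sizes, each group's variance is weighted by its degrees of freedom (group size minus 1).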

- Group 1 Variance:
  - (2-4)² + (4-4)² + (6-4)² = 4 + 0 + 4 = 8
  - 8 / (3-1) = 4

- Group 2 Variance:
  - (7-9)² + (9-9)² + (11-9)² = 4 + 0 + 4 = 8
  - 8 / (3-1) = 4

- Group 3 Variance:
  - (12-14)² + (14-14)² + (16-14)² = 4 + 0 + 4 = 8
  - 8 / (3-1) = 4

Since the variance within each group is the same (4), the average variance within groups is also 4.
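A short Python sketch of the within-group calculation follows. The function name `within_group_variance` is an assumption for illustration. It pools the squared deviations across groups and divides by the total number of observations minus the number of groups, which gives the same answer as averaging the per-group variances whenever the groups are the same size.

```python
import statistics

groups = [[2, 4, 6], [7, 9, 11], [12, 14, 16]]  # the three example groups


def within_group_variance(groups):
    """Pooled within-group variance: SS within / (N - k)."""
    ss_within = 0.0
    for g in groups:
        group_mean = sum(g) / len(g)
        # Squared deviations are taken from each group's own mean.
        ss_within += sum((x - group_mean) ** 2 for x in g)
    df_within = sum(len(g) for g in groups) - len(groups)
    return ss_within / df_within


ms_within = within_group_variance(groups)
# Cross-check: with equal group sizes this equals the average of the
# per-group sample variances.
average_of_variances = sum(statistics.variance(g) for g in groups) / len(groups)

print(ms_within, average_of_variances)  # 4.0 and 4.0 for this data
```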

In this example, the variance between groups (75) is much larger than the variance within groups (4), suggesting that there are substantial differences between the group means. The differences between the groups dwarf the amount of variation within each group.

This foundational understanding of variance, both between and within groups, sets the stage for exploring more advanced statistical techniques, such as ANOVA, which are designed to analyze these variances and draw meaningful conclusions.

Variance between groups reveals fundamental differences, but how do we rigorously test if those differences are statistically significant? The Analysis of Variance, or ANOVA, steps in as a powerful and versatile tool specifically designed for this purpose. It allows us to move beyond simple observation and make informed judgments about the relationships between group means.

ANOVA: A Powerful Tool for Analyzing Variance

ANOVA (Analysis of Variance) is a statistical method used to analyze the differences between the means of two or more groups. It’s particularly useful when you want to compare several groups simultaneously, or when you have a single independent variable with multiple levels.

Why ANOVA Over Multiple T-Tests?

Why use ANOVA instead of running multiple t-tests to compare all possible pairs of groups? The answer lies in the control of the Type I error rate, or the probability of falsely rejecting the null hypothesis (concluding there’s a significant difference when there isn’t one).

Each t-test carries a certain risk of a Type I error (typically set at 5%, or 0.05). When you perform multiple t-tests, this risk accumulates, increasing the overall probability of making at least one false positive conclusion. ANOVA avoids this inflation by testing all group means in a single overall test, so the Type I error rate for that test stays at the chosen alpha level.
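To see how quickly the error rate accumulates, the sketch below computes the chance of at least one false positive across all pairwise t-tests. It assumes the tests are independent, which is only an approximation because pairwise comparisons share groups; the function name `familywise_error_rate` is an assumption for illustration.

```python
from math import comb


def familywise_error_rate(num_groups, alpha=0.05):
    """Return (number of pairwise tests, approximate chance of >= 1 false positive).

    Assumes independent tests, so the result is an approximation.
    """
    num_tests = comb(num_groups, 2)  # every possible pair of groups
    return num_tests, 1 - (1 - alpha) ** num_tests


for k in (3, 4, 5):
    tests, fwer = familywise_error_rate(k)
    print(f"{k} groups -> {tests} t-tests, familywise error rate ~ {fwer:.3f}")
```

Under this approximation, three groups already push the overall error rate to roughly 14%, and five groups to roughly 40%.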

Essentially, ANOVA provides a more accurate and reliable assessment of group differences when dealing with more than two groups.

Key Assumptions of ANOVA

Like any statistical test, ANOVA relies on certain assumptions about the data. Violating these assumptions can compromise the validity of the results. The core assumptions of ANOVA are:

- Normality: The data within each group should be approximately normally distributed. This means the distribution of scores in each group resembles the bell curve.

- Homogeneity of Variance: The variance (spread) of the data should be roughly equal across all groups. This assumption ensures that no single group unduly influences the overall analysis.

- Independence of Observations: The observations within each group, and between groups, should be independent of each other. This means that one participant’s score should not influence another participant’s score.

Addressing Violations of Assumptions

What happens if these assumptions are not met? Several strategies can be employed:

- Normality: If the data are not normally distributed, consider data transformations (e.g., logarithmic transformation) or non-parametric alternatives to ANOVA, such as the Kruskal-Wallis test.

- Homogeneity of Variance: If the variances are unequal, Welch’s ANOVA provides a robust alternative that does not assume equal variances.

- Independence: Violations of independence are more challenging to address and often require more sophisticated statistical techniques or a redesign of the study.

The F-Statistic: Unveiling Group Differences

The heart of ANOVA lies in the F-statistic. The F-statistic is a ratio of the variance between groups to the variance within groups.

F = Variance Between Groups / Variance Within Groups

- Variance Between Groups: This represents the variability in the data that is attributable to the differences between the group means. A larger variance between groups suggests that the group means are more different from each other.

- Variance Within Groups: This represents the variability in the data that is not attributable to group differences but rather to individual differences or random error within each group.

A large F-statistic indicates that the variance between groups is substantially larger than the variance within groups. This provides evidence that the group means are significantly different. Conversely, a small F-statistic suggests that the group means are not significantly different.
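The ratio can be computed directly. The sketch below is a minimal one-way implementation using only the standard library; the function name `f_statistic` and the second, overlapping dataset are assumptions made for illustration.

```python
def f_statistic(groups):
    """One-way ANOVA F: (SS between / (k - 1)) / (SS within / (N - k))."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)

    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


# Well-separated groups from the earlier numerical example.
separated = [[2, 4, 6], [7, 9, 11], [12, 14, 16]]
# Hypothetical groups that share the same mean but have wide spread.
overlapping = [[2, 9, 16], [4, 9, 14], [6, 9, 12]]

f_separated = f_statistic(separated)
f_overlapping = f_statistic(overlapping)
print(f_separated)    # 18.75, i.e. 75 / 4
print(f_overlapping)  # 0.0, because all group means are identical
```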

Degrees of Freedom: The Foundation of Statistical Significance

Degrees of freedom (df) are crucial for determining the statistical significance of the F-statistic. They represent the number of independent pieces of information available to estimate a parameter.

In ANOVA, there are two types of degrees of freedom:

- Degrees of Freedom Numerator (df_between): This is associated with the variance between groups and is calculated as the number of groups minus 1 (k – 1, where k is the number of groups).

- Degrees of Freedom Denominator (df_within): This is associated with the variance within groups and is calculated as the total number of observations minus the number of groups (N – k, where N is the total sample size).

These degrees of freedom, along with the F-statistic, are used to determine the p-value. The p-value indicates the probability of observing the obtained results (or more extreme results) if the null hypothesis were true. A small p-value (typically less than 0.05) provides evidence to reject the null hypothesis and conclude that there is a significant difference between at least two of the group means.
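As a rough illustration, the sketch below derives both degrees of freedom for the earlier three-group example and converts F = 75 / 4 = 18.75 into a p-value. It relies on a closed-form expression for the upper tail of the F distribution that holds only when the numerator degrees of freedom equal 2 (that is, exactly three groups). The function name `p_value_three_groups` is an assumption for illustration; for other designs a statistics library is the practical route (for example, SciPy offers `scipy.stats.f.sf`).

```python
def p_value_three_groups(f_stat, n_total):
    """Upper-tail p-value for a one-way ANOVA with exactly three groups.

    Uses the identity P(F > x) = (1 + 2x / d2) ** (-d2 / 2), which is valid
    only for an F distribution with 2 numerator degrees of freedom.
    """
    k = 3
    df_between = k - 1        # numerator degrees of freedom
    df_within = n_total - k   # denominator degrees of freedom
    p = (1 + 2 * f_stat / df_within) ** (-df_within / 2)
    return df_between, df_within, p


df_between, df_within, p = p_value_three_groups(f_stat=18.75, n_total=9)
print(df_between, df_within)  # 2 and 6
print(round(p, 4))            # about 0.0026, below the usual 0.05 threshold
```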

Hypothesis Testing with ANOVA: A Step-by-Step Guide

ANOVA provides the framework, but to truly understand our findings, we must delve into the realm of hypothesis testing. This process provides a structured approach for drawing conclusions from data, allowing us to determine whether the observed differences between group means are likely due to a real effect or simply random chance. Let’s explore the steps involved in hypothesis testing using ANOVA.

Formulating the Null Hypothesis

The first step in hypothesis testing is to state the null hypothesis. In the context of ANOVA, the null hypothesis (often denoted as H0) posits that there is no significant difference between the means of the groups being compared.

Essentially, it assumes that all groups are drawn from populations with the same mean.

For instance, if we are comparing the effectiveness of three different teaching methods, the null hypothesis would state that the average performance of students is the same regardless of which teaching method they receive.

Defining the Alternative Hypothesis

The alternative hypothesis (H1 or Ha) directly contradicts the null hypothesis. It proposes that there is a significant difference between at least two of the group means.

This does not necessarily mean that all group means are different. It simply implies that there is at least one pair of groups whose means are statistically different from each other.

In our teaching methods example, the alternative hypothesis would suggest that at least one teaching method leads to significantly different average student performance compared to one of the other methods.

Interpreting the P-Value and Making Decisions

The p-value is a crucial output of ANOVA. It represents the probability of observing the obtained results (or more extreme results) if the null hypothesis were true.

In simpler terms, it quantifies the strength of evidence against the null hypothesis. A small p-value suggests strong evidence against the null hypothesis, while a large p-value suggests weak evidence.

A pre-determined significance level (alpha), commonly set at 0.05, is used as a threshold.

- If the p-value is less than the significance level (p < 0.05), we reject the null hypothesis. This suggests that the observed differences between group means are statistically significant and unlikely to be due to random chance.

- Conversely, if the p-value is greater than or equal to the significance level (p ≥ 0.05), we fail to reject the null hypothesis. This means that we do not have enough evidence to conclude that there are significant differences between the group means.

It’s important to remember that failing to reject the null hypothesis does not prove it is true; it simply means we lack sufficient evidence to reject it.

When to Use a T-Test Instead of ANOVA

While ANOVA is ideal for comparing three or more groups, a t-test is more appropriate when you want to compare the means of only two groups. T-tests are specifically designed for this pairwise comparison.

There are two main types of t-tests:

- Independent samples t-test: Used when comparing the means of two independent groups (e.g., comparing the test scores of students in two different schools).

- Paired samples t-test: Used when comparing the means of two related groups or measurements (e.g., comparing the blood pressure of patients before and after taking a medication).

In essence, when the research question involves comparing only two groups, a t-test offers a more focused and efficient approach compared to ANOVA.

Yet the pursuit of knowledge doesn’t end with a statistically significant p-value.

Beyond Statistical Significance: Effect Size and Assessing Assumptions

While the p-value serves as a gatekeeper, indicating whether our results are statistically significant, it often masks a crucial element: the magnitude of the observed effect. Focusing solely on the p-value can paint an incomplete, even misleading, picture of our findings.

The Pitfalls of P-Value Obsession

A statistically significant p-value (typically p < 0.05) simply tells us that the observed result is unlikely to have occurred by chance alone, assuming the null hypothesis is true. However, it says nothing about the practical importance or the size of the effect.

In situations with large sample sizes, even trivial differences between group means can yield statistically significant p-values. Conversely, meaningful and potentially important effects might be overlooked in studies with smaller sample sizes if they fail to reach the conventional significance threshold.

Therefore, relying solely on the p-value can lead to overemphasizing small, statistically significant effects while simultaneously ignoring larger, non-significant ones. This is why a p-value should be treated as one piece of evidence, weighed alongside effect size and context, rather than as a definitive verdict.

Effect Size: Quantifying Practical Significance

Effect size measures provide a standardized way to quantify the magnitude of the effect, independent of sample size. Unlike the p-value, effect size focuses on the practical importance of the finding.

Several effect size measures are commonly used in conjunction with ANOVA, each providing a slightly different perspective on the magnitude of the observed effect. Here are a few examples:

- Cohen’s d: Primarily used when comparing two group means, Cohen’s d expresses the difference between the means in terms of standard deviation units. Values of 0.2, 0.5, and 0.8 are often interpreted as small, medium, and large effects, respectively, but these interpretations should be considered within the specific context of the research area.

- Eta-squared (η²): Represents the proportion of variance in the dependent variable that is explained by the independent variable (group membership). It ranges from 0 to 1, with higher values indicating a larger proportion of variance explained. However, eta-squared tends to overestimate the population effect size.

- Partial Eta-squared (ηp²): A modified version of eta-squared that is often preferred in more complex ANOVA designs. It represents the proportion of variance in the dependent variable not attributable to other factors that is explained by the independent variable.
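Two of these measures can be sketched with the standard library. The function names `eta_squared` and `cohens_d` are assumptions for illustration, Cohen’s d is computed here with the pooled standard deviation (one common convention), and the data are the three groups from the earlier numerical example. In a one-way design with a single factor, partial eta-squared coincides with eta-squared, so it is not computed separately.

```python
import statistics


def eta_squared(groups):
    """Proportion of total variability explained by group membership."""
    all_points = [x for g in groups for x in g]
    grand_mean = statistics.mean(all_points)
    ss_total = sum((x - grand_mean) ** 2 for x in all_points)
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2
                     for g in groups)
    return ss_between / ss_total


def cohens_d(group_a, group_b):
    """Mean difference (a - b) in units of the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * statistics.variance(group_a)
                  + (n_b - 1) * statistics.variance(group_b)) / (n_a + n_b - 2)
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_var ** 0.5


groups = [[2, 4, 6], [7, 9, 11], [12, 14, 16]]
eta_sq = eta_squared(groups)          # 150 / 174
d = cohens_d(groups[1], groups[0])    # (9 - 4) / 2

print(round(eta_sq, 3))  # 0.862
print(d)                 # 2.5
```

Both values are very large because the toy groups barely overlap; real data rarely produce effects of this size.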

Interpreting effect sizes requires careful consideration of the specific research context, the nature of the variables being studied, and the practical implications of the findings. What constitutes a "small" or "large" effect is often domain-specific.

Assessing Assumptions: Levene’s Test and Homogeneity of Variance

ANOVA relies on certain assumptions about the data, one of the most critical being the homogeneity of variance. This assumption states that the variance within each group being compared should be approximately equal. Violating this assumption can lead to inaccurate p-values and potentially flawed conclusions.

Levene’s test is a statistical test used to assess the homogeneity of variance. It tests the null hypothesis that the variances of all groups are equal.

If Levene’s test is not significant (p > 0.05), we can assume that the assumption of homogeneity of variance is met, and the standard ANOVA results are considered valid.

However, if Levene’s test is significant (p < 0.05), it suggests that the variances are significantly different across groups, violating the homogeneity of variance assumption.

When Levene’s test indicates a violation of the assumption, several alternative approaches can be considered. One common solution is to use Welch’s ANOVA, a modified version of ANOVA that does not assume equal variances.

Welch’s ANOVA provides a more robust test when the homogeneity of variance assumption is violated. Another approach might involve data transformations to stabilize the variances, but such transformations should be applied cautiously and with careful consideration of their potential impact on the interpretability of the results.
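The Levene statistic itself is simple to compute: it is a one-way ANOVA F-statistic applied to the absolute deviations of each observation from its own group mean. The sketch below shows this mean-centered form using only the standard library; the function name `levene_statistic` and the second dataset are assumptions for illustration. It returns only the statistic, not a p-value, and statistical packages may default to a median-centered variant (SciPy's `scipy.stats.levene`, for instance, uses `center='median'` by default), so results can differ slightly from software output.

```python
def levene_statistic(groups):
    """Mean-centered Levene statistic W for a list of groups."""
    # Replace each observation with its absolute deviation from its group mean.
    z = [[abs(x - sum(g) / len(g)) for x in g] for g in groups]

    k = len(z)
    n_total = sum(len(zg) for zg in z)
    z_means = [sum(zg) / len(zg) for zg in z]
    z_grand = sum(sum(zg) for zg in z) / n_total

    # Ordinary one-way ANOVA F computed on the deviations.
    ss_between = sum(len(zg) * (zm - z_grand) ** 2 for zg, zm in zip(z, z_means))
    ss_within = sum((v - zm) ** 2 for zg, zm in zip(z, z_means) for v in zg)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


equal_spread = [[2, 4, 6], [7, 9, 11], [12, 14, 16]]   # same spread in every group
unequal_spread = [[2, 4, 6], [7, 9, 11], [2, 9, 16]]   # hypothetical: third group is wider

w_equal = levene_statistic(equal_spread)
w_unequal = levene_statistic(unequal_spread)
print(w_equal)    # 0 (up to rounding): the spreads are identical
print(w_unequal)  # larger, reflecting the wider third group
```

The statistic is then compared against an F distribution with k – 1 and N – k degrees of freedom to obtain the p-value discussed above.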

Real-World Applications of Variance Between Groups Analysis

The true power of ANOVA and the analysis of variance between groups lies not just in its theoretical underpinnings, but in its practical application across diverse fields. By examining real-world case studies, we can appreciate how this statistical technique informs decision-making, drives innovation, and ultimately leads to improved outcomes.

Healthcare: Comparing Treatment Effectiveness

In healthcare, ANOVA is frequently employed to compare the effectiveness of different treatments or interventions. Consider a clinical trial examining the efficacy of three different drugs for managing hypertension.

Researchers would divide participants into three groups, each receiving one of the drugs.

After a specified period, they would measure blood pressure levels in each group.

ANOVA would then be used to determine if there are statistically significant differences in mean blood pressure reduction among the three groups.

If a significant difference is found, post-hoc tests could be used to determine which specific drug(s) are most effective.

Beyond drug trials, ANOVA can also be used to assess the effectiveness of different surgical techniques, rehabilitation programs, or public health initiatives.

The insights gained from these analyses can inform clinical practice guidelines and ultimately improve patient care.

Education: Evaluating Teaching Methods

The field of education also benefits greatly from variance between groups analysis. For example, a school district might want to evaluate the effectiveness of different teaching methods for reading comprehension.

Students could be randomly assigned to one of several groups, each receiving a different instructional approach.

At the end of the semester, a standardized reading comprehension test would be administered.

ANOVA would then be used to compare the mean test scores across the different groups.

If significant differences are observed, it would suggest that certain teaching methods are more effective than others.

This information could then be used to inform curriculum development and teacher training programs.

Moreover, ANOVA can be applied to assess the impact of various educational interventions, such as after-school programs, tutoring services, or technology integration initiatives.

Marketing: Assessing Advertising Campaign Performance

In the world of marketing, ANOVA is a valuable tool for assessing the performance of different advertising campaigns. A company might launch three different versions of an online advertisement and then track click-through rates for each version.

By using ANOVA to compare the mean click-through rates across the three ad versions, marketers can determine which ad is most effective at attracting customer interest.

Similarly, ANOVA can be used to analyze the impact of different marketing strategies on sales, brand awareness, or customer satisfaction.

For instance, a company might implement different pricing strategies in different regions and then use ANOVA to compare the resulting sales figures.

The insights gleaned from these analyses can inform advertising campaign design, resource allocation, and overall marketing strategy.

Social Sciences: Exploring Social Phenomena

The social sciences often rely on ANOVA to explore complex social phenomena. For instance, researchers might want to investigate the relationship between socioeconomic status and academic achievement.

They could divide participants into different socioeconomic groups (e.g., low, middle, high) and then compare their mean academic performance scores.

ANOVA would allow them to determine if there are statistically significant differences in academic achievement across the different socioeconomic groups.

Likewise, ANOVA can be used to study the impact of various social factors, such as ethnicity, gender, or geographic location, on a wide range of outcomes, including health, employment, and civic engagement.

The results of these analyses can inform social policies and interventions aimed at addressing social inequalities and promoting social justice.

Between-Subjects Designs and ANOVA

A key aspect of applying ANOVA in these real-world scenarios is the use of between-subjects designs. In a between-subjects design, each participant is assigned to only one experimental group. This contrasts with within-subjects designs where the same participants are exposed to all conditions.

The hypertension drug trial, the teaching method evaluation, the advertising campaign assessment, and the socioeconomic status study are all examples of between-subjects designs.

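In each case, every participant belongs to exactly one group, and outcomes are compared across groups using ANOVA. Where the researcher controls group membership, as in the drug trial or the teaching method evaluation, random assignment is crucial to ensure that any observed differences between groups are due to the experimental manipulation and not pre-existing differences between participants. Where the groups already exist, as with socioeconomic status, random assignment is not possible, so a significant result indicates an association rather than a causal effect.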

The ANOVA test then provides a framework for rigorously analyzing the data collected from these between-subjects designs, allowing researchers and practitioners to draw meaningful conclusions about the effects of different interventions, treatments, or social factors.

Frequently Asked Questions: Understanding Variance Between Groups

Here are some common questions about variance between groups and how it relates to statistical analysis.

What exactly does "variance between groups" measure?

Variance between groups quantifies the differences in means across different groups or populations. It reflects how much the average value of each group deviates from the overall average of all the groups combined. A higher variance between groups indicates more substantial differences between the group means.

How is variance between groups related to ANOVA?

Analysis of Variance (ANOVA) uses the concept of variance between groups to determine if there’s a statistically significant difference among the means of three or more groups. It compares the variance between groups to the variance within groups. If the variance between groups is significantly larger, it suggests a real difference exists between the group means.

Is a high variance between groups always desirable?

Not necessarily. While a high variance between groups indicates distinct differences between groups, it doesn’t automatically mean the differences are meaningful or important. The context of the study and the specific research question determine whether a high or low variance between groups is expected or desirable.

What factors can influence the variance between groups?

Several factors can influence the variance between groups, including the true differences between the groups, the sample size of each group, and the amount of variability within each group. Larger sample sizes and less within-group variability generally make it easier to detect significant variance between groups.

So, there you have it – a crash course on variance between groups. Hope you found this helpful! Now, go out there and start analyzing those datasets like a pro!