Datagrams, the fundamental unit of data transmission in UDP, form the backbone of this connectionless protocol. In contrast to TCP, UDP prioritizes speed over guaranteed delivery, making it suitable for applications where latency is critical. Network congestion remains a potential issue, but well-designed applications can often mitigate it. DNS servers, for example, rely heavily on UDP’s efficient communication model to resolve domain names quickly.

In network communication, protocols are the foundational languages that enable data exchange between devices. They establish the rules and standards that govern how information is formatted, transmitted, and interpreted across networks. Understanding these protocols is essential for anyone seeking to grasp how modern networking works.

The Importance of Network Protocols

Network protocols are the unsung heroes of the digital age. They ensure that different devices, regardless of their hardware or software configurations, can effectively communicate.

Without these standardized rules, chaos would reign, and the internet as we know it would be impossible.

From simple web browsing to complex data transfers, protocols are at work behind the scenes, ensuring everything runs smoothly.

UDP: A Key Player in the TCP/IP Suite

Within the vast landscape of network protocols, the User Datagram Protocol (UDP) stands out as a pivotal component of the TCP/IP suite.

UDP provides a connectionless method for transmitting data packets. It’s often favored in scenarios where speed and efficiency are paramount.

Its simplicity and low overhead make it an ideal choice for specific applications that can tolerate some degree of data loss.

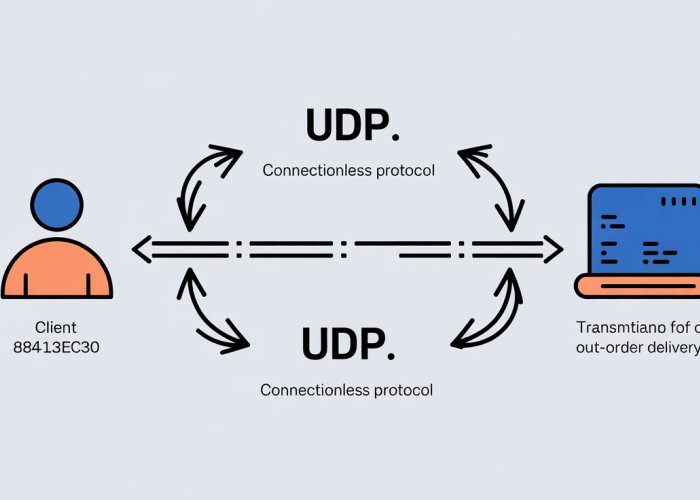

Connectionless Communication: UDP’s Core

The defining characteristic of UDP is its connectionless nature. Unlike its counterpart, TCP (Transmission Control Protocol), UDP does not establish a dedicated connection before transmitting data.

Instead, it simply sends packets of information (datagrams) to the destination without prior negotiation or acknowledgment.

This approach drastically reduces overhead and latency. It enables faster data transfer, particularly in situations where real-time performance is critical.

Contrasting with Connection-Oriented Communication (TCP)

TCP, on the other hand, employs a connection-oriented approach. Before any data is exchanged, TCP establishes a reliable connection between the sender and receiver through a process known as the "three-way handshake."

This handshake ensures that both devices are ready to communicate and agree on parameters for data transmission.

While TCP guarantees reliable delivery and ordered data transfer, this comes at the cost of increased overhead and latency compared to UDP. The choice between UDP and TCP often depends on the specific requirements of the application.

UDP in the TCP/IP Protocol Suite

UDP resides within the transport layer of the TCP/IP protocol suite, alongside TCP. This layer is responsible for providing end-to-end communication between applications.

While TCP offers reliable, ordered delivery of data, UDP provides a faster, more lightweight alternative.

By understanding UDP’s place within this framework, we can better appreciate its role in supporting a wide range of network applications and services.

In scenarios where speed is of the essence, and minor data loss is tolerable, connectionless communication offers a compelling alternative. But what exactly defines this approach, and how does it differ from the more common connection-oriented methods?

Delving into Connectionless Communication: UDP’s Foundation

At the heart of UDP’s functionality lies its connectionless nature. This approach fundamentally changes how data is transmitted compared to connection-oriented protocols. Understanding this distinction is key to appreciating UDP’s role and advantages in network communication.

Defining Connectionless Communication

Connectionless communication, as the name suggests, does not require a pre-established connection between the sender and receiver before data transmission.

This means that data packets, or datagrams, are sent independently, without any prior negotiation or agreement on transmission parameters.

Each datagram carries its own addressing (the destination IP address in the IP header and the destination port in the UDP header) and is routed through the network independently.
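To make this concrete, here is a minimal sketch using Python's standard `socket` module on the loopback interface. Note what is missing: there is no `connect()`, `listen()`, or `accept()`. The sender addresses each datagram individually with `sendto()`, and the receiver learns who sent it from `recvfrom()`. The port is chosen by the operating system (binding to port 0), and the message contents are illustrative.

```python
import socket

# Receiver: bind to an OS-chosen port on the loopback interface.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(2.0)  # don't block forever if a datagram never arrives
addr = receiver.getsockname()

# Sender: no handshake; every sendto() names its destination explicitly.
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
for i in range(3):
    sender.sendto(f"datagram {i}".encode(), addr)

# Each recvfrom() returns one whole datagram plus the sender's address.
received = []
for _ in range(3):
    data, peer = receiver.recvfrom(2048)
    received.append(data.decode())
    print(peer, data.decode())

sender.close()
receiver.close()
```

On loopback these datagrams will almost always arrive, and in order. Across a real network any of them could be lost or reordered, which is why the receiver sets a timeout instead of waiting indefinitely.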

Absence of Formal Connection Establishment and Teardown

One of the defining characteristics of connectionless communication is the absence of a formal connection establishment phase. Unlike connection-oriented protocols, there is no "handshake" or negotiation process to initiate a session.

Similarly, there is no explicit "teardown" or closure of a connection. Once the data is sent, the sender simply forgets about it, with no guarantee of delivery and no acknowledgement.

This lack of connection management simplifies the communication process and reduces the overhead associated with establishing and maintaining connections.

Connection-Oriented Communication (TCP) Contrasted

To fully appreciate the nature of connectionless communication, it’s essential to contrast it with its counterpart: connection-oriented communication, exemplified by TCP (Transmission Control Protocol).

Unlike UDP, TCP establishes a dedicated connection between the sender and receiver before any data is transmitted.

The TCP Three-Way Handshake

This connection is established through a process known as the three-way handshake.

- The client sends a SYN (synchronize) packet to the server.

- The server responds with a SYN-ACK (synchronize-acknowledge) packet.

- The client then sends an ACK (acknowledge) packet to confirm the connection.

This three-way handshake ensures that both the sender and receiver are ready to communicate. It also establishes initial sequence numbers for reliable data transfer.
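The sequence-number exchange can be modeled in a few lines. This is a toy simulation, not a real TCP implementation: the `Segment` type and `three_way_handshake` function are hypothetical, and real stacks choose initial sequence numbers (ISNs) more carefully than `random.getrandbits`. It shows that each side acknowledges the other's ISN plus one, because the SYN flag itself consumes one sequence number.

```python
import random
from dataclasses import dataclass
from typing import Optional

MOD = 2 ** 32  # TCP sequence numbers are 32-bit and wrap around

@dataclass
class Segment:
    # Hypothetical, simplified view of a TCP segment: flags plus numbers.
    flags: str
    seq: int
    ack: Optional[int] = None

def three_way_handshake(client_isn: int, server_isn: int) -> list:
    # 1. Client -> server: SYN carrying the client's initial sequence number.
    syn = Segment("SYN", seq=client_isn)
    # 2. Server -> client: SYN-ACK with the server's own ISN, acknowledging
    #    client ISN + 1 (the SYN flag consumes one sequence number).
    syn_ack = Segment("SYN-ACK", seq=server_isn, ack=(syn.seq + 1) % MOD)
    # 3. Client -> server: ACK, now at client ISN + 1, acknowledging server ISN + 1.
    ack = Segment("ACK", seq=syn_ack.ack, ack=(syn_ack.seq + 1) % MOD)
    return [syn, syn_ack, ack]

for segment in three_way_handshake(random.getrandbits(32), random.getrandbits(32)):
    print(segment)
```

Only after the third segment can either side send application data, which is the round trip UDP avoids.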

Overhead of Connection Management

While the three-way handshake lays the groundwork for reliable delivery, it also introduces overhead: a full round trip must complete before any application data can flow.

The process of establishing and maintaining a connection consumes network resources and adds latency to the communication.

Furthermore, TCP requires additional mechanisms for acknowledgement, retransmission, flow control, and congestion control.

This overhead makes TCP less suitable for applications where speed and low latency are paramount.

The Role of the UDP Header

Despite its connectionless nature, UDP does include a header that carries the information needed to deliver the data to the right application and to check it for corruption.

The UDP header is much simpler than the TCP header, reflecting its streamlined approach to data transmission.

The UDP header is a fixed 8 bytes long and contains four 16-bit fields:

- Source Port: The port number of the sending application.

- Destination Port: The port number of the receiving application.

- Length: The length of the UDP datagram in bytes (header + data), so never less than 8.

- Checksum: A checksum used for error detection; optional in IPv4 (a value of zero means "not computed") but mandatory in IPv6.

The destination port is crucial for directing the data to the correct application on the receiving end.

The checksum provides a basic level of data integrity verification: it lets the receiver detect whether the data was corrupted during transmission.

By examining the UDP header, the receiving device can properly interpret the data and ensure that it reaches the intended application.
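The header is simple enough to build by hand. The sketch below uses Python's `struct` module to pack the four 16-bit fields in network byte order and computes the IPv4 checksum as described in RFC 768: the one’s complement of the one’s complement sum of a pseudo-header (source and destination IP addresses, protocol number 17, UDP length), the UDP header, and the payload. The helper names, addresses, ports, and payload are illustrative assumptions; in practice the operating system's network stack does all of this for you.

```python
import socket
import struct

UDP_PROTOCOL = 17  # IP protocol number assigned to UDP

def ones_complement_sum16(data: bytes) -> int:
    """Sum big-endian 16-bit words, folding carries back in (end-around carry)."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)
    return total

def udp_checksum(src_ip: str, dst_ip: str, udp_bytes: bytes) -> int:
    """IPv4 UDP checksum over the pseudo-header, UDP header, and payload."""
    pseudo_header = struct.pack(
        "!4s4sBBH",
        socket.inet_aton(src_ip),
        socket.inet_aton(dst_ip),
        0,               # zero byte
        UDP_PROTOCOL,
        len(udp_bytes),  # UDP length (header + data)
    )
    checksum = ~ones_complement_sum16(pseudo_header + udp_bytes) & 0xFFFF
    # A computed checksum of 0 is sent as 0xFFFF, because 0 means "no checksum".
    return checksum or 0xFFFF

def build_udp_datagram(src_ip: str, dst_ip: str, src_port: int,
                       dst_port: int, payload: bytes) -> bytes:
    length = 8 + len(payload)  # fixed 8-byte header plus data
    # Compute the checksum with the checksum field set to zero, then fill it in.
    zeroed = struct.pack("!HHHH", src_port, dst_port, length, 0) + payload
    checksum = udp_checksum(src_ip, dst_ip, zeroed)
    return struct.pack("!HHHH", src_port, dst_port, length, checksum) + payload

# Documentation-range addresses; all values are illustrative only.
datagram = build_udp_datagram("192.0.2.1", "198.51.100.7", 40000, 53, b"hello")
src_port, dst_port, length, checksum = struct.unpack("!HHHH", datagram[:8])
print(src_port, dst_port, length, hex(checksum))
```

A receiver verifies the datagram by summing the same pseudo-header, the header (with the transmitted checksum in place), and the payload; an uncorrupted datagram sums to 0xFFFF.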


The Advantages of UDP: Speed, Efficiency, Simplicity

Having examined the fundamentals of UDP and its connectionless architecture, it’s time to assess the advantages that this design philosophy brings to the table. UDP’s strengths lie in its speed, efficiency, and relative simplicity, making it a compelling choice for specific applications where these factors outweigh the need for guaranteed delivery.

Speed and Efficiency: Minimizing Overhead

The most prominent advantage of UDP is its speed.

This stems directly from its connectionless nature.

Unlike TCP, UDP does not require the overhead of establishing and maintaining a connection.

There is no three-way handshake, no connection state to track, and no explicit connection termination process.

This lack of overhead translates to faster data transfer, as the protocol can immediately begin transmitting data without any preliminary negotiation.

For time-sensitive applications, this reduced overhead can make a significant difference.

The Impact of Connection Overhead

The absence of connection management translates to greater bandwidth efficiency.

Every packet carries only the application data and a minimal, fixed 8-byte UDP header (compared with a minimum of 20 bytes for a TCP header).

This minimizes the overhead associated with each transmission, allowing for more data to be transmitted within the same bandwidth allocation.

In environments with limited bandwidth, this efficiency gain can be crucial.

Scenarios Where Speed Reigns Supreme

UDP’s speed and efficiency make it particularly well-suited for scenarios where speed is paramount.

This includes applications such as streaming media, online gaming, and real-time communication.

In these cases, the occasional dropped packet is often less detrimental than the delay introduced by connection-oriented protocols.

For example, in a fast-paced online game, a dropped packet might result in a momentary visual glitch, which is preferable to a sudden lag spike caused by TCP’s retransmission mechanisms.

Low Latency: The Key to Responsiveness

Closely related to speed is the concept of latency, which refers to the delay between sending and receiving data.

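UDP inherently offers lower latency than TCP because it has no connection establishment and no retransmission. It also avoids head-of-line blocking: when a TCP segment is lost, data that arrived after it is held back until the retransmission arrives, whereas each UDP datagram is handed to the application as soon as it arrives.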

This is a critical advantage for applications requiring near-instantaneous responses.

Real-Time Applications and Low Latency

The low latency offered by UDP makes it ideally suited for real-time applications like voice and video conferencing.

Even small delays can significantly degrade the user experience, making UDP the preferred choice despite the possibility of occasional packet loss.

The trade-off between reliability and speed is often worthwhile in these scenarios.

Simplicity: Streamlining Development

Beyond speed and efficiency, UDP also benefits from its relative simplicity.

The protocol itself is significantly less complex than TCP, making it easier to implement and debug.

This reduced complexity can lead to faster development cycles and lower development costs.

A Simplified Protocol Stack

The streamlined nature of UDP translates to a simpler protocol stack.

Developers have greater control over the transmission process, allowing them to implement custom reliability mechanisms or tailor the protocol to specific application requirements.

This flexibility can be a significant advantage for developers working on specialized applications.

Having established the fundamentals of UDP and its inherent advantages, it’s beneficial to examine real-world scenarios where its unique characteristics shine. From the immediacy of voice and video calls to the responsiveness of online gaming, UDP’s impact is pervasive. Let’s delve into specific applications where its speed and efficiency make it the protocol of choice.

Use Cases for UDP: Where Speed Matters Most

UDP’s design makes it exceptionally well-suited for applications prioritizing speed and real-time data transmission over guaranteed delivery. While TCP ensures every packet arrives correctly and in order, this comes at the cost of added overhead and latency. UDP embraces a "best-effort" approach, making it ideal where timely data is more critical than 100% reliability.

Real-Time Applications: The Need for Speed

In real-time communication like voice over IP (VoIP) and video conferencing, even small delays can significantly degrade the user experience. A lag of even a fraction of a second can disrupt conversations and make interactions feel unnatural.

UDP is often favored because its low overhead allows for faster transmission of audio and video data. While some packets may be lost during transmission, the human brain can often compensate for these minor gaps, especially in rapidly changing streams.

The trade-off is accepting occasional glitches in favor of maintaining a smoother, more responsive connection overall. Error correction and retransmission mechanisms, if required, are typically handled at the application layer.

Streaming Media: Balancing Quality and Timeliness

Live and low-latency streaming often relies on UDP, either directly or through protocols built upon it, such as RTP (used by WebRTC) or QUIC. Content delivery networks (CDNs) have traditionally served pre-recorded content over TCP because of its reliability, while live streams benefit from UDP’s reduced latency.

In these scenarios, a slight loss of data is often preferable to buffering or freezing. Viewers are more likely to tolerate occasional pixelation or dropped frames than a complete interruption of the stream.

Techniques like forward error correction (FEC) can be implemented to mitigate the impact of packet loss, adding redundancy to the data stream so that missing packets can be reconstructed.
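A simple form of FEC is an XOR parity packet: for every group of equal-length packets, the sender also transmits their bytewise XOR, and the receiver can rebuild any single missing packet from the survivors plus the parity. This is a minimal sketch under those assumptions (equal-length packets, at most one loss per group); the function names are hypothetical, and production schemes such as Reed-Solomon codes can recover more than one loss.

```python
from functools import reduce

def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def make_parity(packets: list) -> bytes:
    # Parity packet = XOR of all data packets in the group (equal lengths assumed).
    return reduce(xor_bytes, packets)

def recover(received: list, parity: bytes) -> list:
    """Rebuild at most one missing packet (marked None) in a group."""
    missing = [i for i, p in enumerate(received) if p is None]
    if not missing:
        return received
    if len(missing) > 1:
        raise ValueError("XOR parity can repair only one lost packet per group")
    present = [p for p in received if p is not None]
    # XOR of the parity with every surviving packet yields the lost one.
    rebuilt = reduce(xor_bytes, present, parity)
    fixed = list(received)
    fixed[missing[0]] = rebuilt
    return fixed

group = [b"pkt0", b"pkt1", b"pkt2", b"pkt3"]
parity = make_parity(group)
arrived = [b"pkt0", None, b"pkt2", b"pkt3"]  # packet 1 was lost in transit
print(recover(arrived, parity))  # [b'pkt0', b'pkt1', b'pkt2', b'pkt3']
```

The cost is one extra packet per group; the benefit is that a single loss is repaired without waiting a round trip for a retransmission.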

Online Gaming: Responsiveness is Key

Online gaming demands low latency to ensure a responsive and immersive experience. Actions need to be registered and reflected in the game world as quickly as possible.

Any delay can lead to a frustrating experience for players. UDP is frequently used to transmit player position, actions, and other game-related data.

While some packet loss is inevitable, especially in fast-paced games, the impact is usually less significant than the delay that TCP’s retransmission mechanisms would introduce. Game developers often implement techniques to smooth over lost packets, such as interpolation, to minimize visual glitches.
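Interpolation typically works on timestamped position snapshots: the client renders slightly in the past and blends between the two snapshots that bracket the render time, so a single lost update merely widens the gap being blended. A minimal sketch, assuming 2D positions and hypothetical `(time_ms, x, y)` snapshots sorted by time:

```python
from bisect import bisect_right

def interpolate(snapshots, render_time):
    """Linearly interpolate (x, y) from (time_ms, x, y) snapshots sorted by time."""
    times = [t for t, _, _ in snapshots]
    i = bisect_right(times, render_time)
    if i == 0:
        return snapshots[0][1:]    # before the first snapshot: hold it
    if i == len(snapshots):
        return snapshots[-1][1:]   # past the newest snapshot: hold the last one
    (t0, x0, y0), (t1, x1, y1) = snapshots[i - 1], snapshots[i]
    alpha = (render_time - t0) / (t1 - t0)  # 0.0 at t0, approaching 1.0 at t1
    return (x0 + alpha * (x1 - x0), y0 + alpha * (y1 - y0))

# The update for t=100 ms was lost; the client blends across the wider gap.
snapshots = [(0, 0.0, 0.0), (50, 1.0, 0.0), (150, 3.0, 2.0)]
print(interpolate(snapshots, 100))  # (2.0, 1.0)
```

Holding the last position when no newer snapshot exists is the simplest choice; some games extrapolate instead, at the risk of visible corrections when the next update arrives.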

DNS (Domain Name System): Fast Lookups

The Domain Name System (DNS) translates human-readable domain names (like "example.com") into IP addresses that computers use to locate servers on the internet. Speed is paramount in DNS lookups, because a lookup typically has to complete before a connection to a new host can even begin.

Most DNS queries and responses are small enough to fit in a single UDP datagram (classic DNS limits UDP messages to 512 bytes unless the EDNS extension is used). This makes UDP an ideal choice for its speed and efficiency.

While TCP is used for larger DNS responses or when a DNS server needs to transfer zone data to another, UDP is the primary transport protocol for standard DNS queries. The speed of UDP allows for faster web browsing and application performance.
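To see how small a typical query is, the sketch below builds a standard DNS query for an A record by hand, following the message format in RFC 1035: a 12-byte header followed by the question section. The query ID and domain are illustrative. Sending it with `sendto()` to a resolver's port 53 would take a single datagram; the sketch only builds and measures the packet so it runs offline.

```python
import struct

def build_dns_query(name: str, query_id: int = 0x1234) -> bytes:
    # Header: ID, flags (0x0100 = standard query with "recursion desired" set),
    # QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=0.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, terminated by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii") for label in name.split(".")
    ) + b"\x00"
    # QTYPE=1 (A record), QCLASS=1 (IN, the Internet class).
    return header + qname + struct.pack("!HH", 1, 1)

query = build_dns_query("example.com")
print(len(query), "bytes")  # 29 bytes, far below the 512-byte limit
```

A 29-byte request plus a response that usually fits in one datagram is why a lookup over UDP can complete in a single round trip.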

Having explored UDP’s strengths in scenarios demanding speed, it’s crucial to acknowledge its inherent weaknesses. The "best-effort" delivery model, while enabling rapid data transmission, comes with trade-offs in reliability. Understanding these limitations and how to address them is essential for effectively utilizing UDP in various applications.

Challenges and Considerations: Addressing UDP’s Drawbacks

UDP’s design prioritizes speed and efficiency, but this comes at the cost of guaranteed delivery and order. This section will delve into these challenges, exploring strategies to mitigate them while emphasizing the crucial role of the Checksum in maintaining data integrity.

The Inevitable: Packet Loss

Unlike TCP, UDP operates on a connectionless model, meaning there’s no formal handshake to establish a connection or guarantee that every packet arrives at its destination. This inherent characteristic implies that packet loss is a distinct possibility when using UDP.

UDP does not implement any mechanisms to retransmit lost packets, nor does it ensure that packets arrive in the order they were sent.

This is the primary trade-off for UDP’s speed advantage. In situations where network congestion is high or the connection is unreliable, packet loss can become a significant issue.

Strategies for Handling Packet Loss

While UDP itself doesn’t provide built-in error correction, there are strategies developers can implement at the application layer to address packet loss:

- Forward Error Correction (FEC): Including redundant information with each packet allows the receiver to reconstruct lost data without requesting retransmission.

- Acknowledgement and Retransmission: Implementing a custom acknowledgement system at the application level, where the receiver explicitly acknowledges received packets and the sender retransmits any unacknowledged packets after a timeout.

- Rate Limiting and Congestion Control: Adjusting the sending rate to avoid overwhelming the network, since congestion increases the likelihood of packet loss.
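The acknowledgement-and-retransmission strategy can be sketched as a stop-and-wait protocol: each datagram carries a sequence number, the receiver replies with an ACK, and the sender retransmits after a timeout. This is a minimal, hypothetical design running over loopback; to exercise the retransmission path, the receiver deliberately drops the first copy of every packet. The 4-byte sequence-number header, timeout, and retry count are all assumptions, and real protocols keep many packets in flight rather than waiting for each ACK.

```python
import socket
import struct
import threading

ACK_TIMEOUT = 0.2  # seconds to wait for an ACK before retransmitting (assumed)
MAX_RETRIES = 5    # give up after this many attempts (assumed)

def run_receiver(sock: socket.socket, expected: int, delivered: list) -> None:
    """Deliver packets in order and ACK them; drops each packet's first copy."""
    seen = set()
    while len(delivered) < expected:
        data, peer = sock.recvfrom(2048)
        (seq,) = struct.unpack("!I", data[:4])
        if seq not in seen:
            seen.add(seq)  # simulated loss: silently drop the first copy
            continue
        if seq == len(delivered):  # next in-order packet: deliver it once
            delivered.append(data[4:])
        sock.sendto(struct.pack("!I", seq), peer)  # ACK, including duplicates

def send_reliably(sock: socket.socket, addr, seq: int, payload: bytes) -> int:
    """Send one datagram, retransmitting until it is ACKed; returns attempts used."""
    packet = struct.pack("!I", seq) + payload  # 4-byte sequence-number header
    for attempt in range(1, MAX_RETRIES + 1):
        sock.sendto(packet, addr)
        try:
            while True:
                ack, _ = sock.recvfrom(16)
                if struct.unpack("!I", ack[:4])[0] == seq:
                    return attempt
                # A stale ACK for an earlier packet: keep waiting for ours.
        except socket.timeout:
            continue  # no matching ACK in time: retransmit
    raise TimeoutError(f"packet {seq} not acknowledged after {MAX_RETRIES} attempts")

rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
rx.bind(("127.0.0.1", 0))
rx.settimeout(5.0)  # keep the demo from hanging if something goes wrong
tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
tx.settimeout(ACK_TIMEOUT)

delivered = []
worker = threading.Thread(target=run_receiver, args=(rx, 3, delivered))
worker.start()
attempts = [send_reliably(tx, rx.getsockname(), i, f"msg {i}".encode()) for i in range(3)]
worker.join(timeout=5.0)
print(delivered, attempts)
tx.close()
rx.close()
```

Every message needs at least two attempts here because the first copy is always dropped; the receiver re-ACKs duplicates so a sender whose ACK was lost can still make progress.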

Application-Layer Reliability: Taking Responsibility

Because UDP lacks built-in reliability mechanisms, the onus of ensuring reliable data transfer falls squarely on the application developer. This means designing the application to detect and handle packet loss, reordering, and duplication.

This can involve implementing custom protocols on top of UDP, adding complexity to the development process. However, it also provides greater flexibility to tailor the reliability mechanisms to the specific needs of the application.

The key consideration is to analyze the application’s requirements and determine the appropriate level of reliability needed. For some applications, occasional packet loss may be tolerable, while others may require near-perfect reliability.
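Detecting loss, reordering, and duplication usually rests on a sequence number that the application places in each datagram, since UDP itself carries none. The hypothetical `SequenceTracker` below classifies each arriving number as new, late (reordered), or duplicate, and reports gaps that may indicate loss. A late packet can still fill a gap, so a gap is only a candidate loss until some application-chosen timeout passes.

```python
class SequenceTracker:
    """Receiver-side bookkeeping for loss, reordering, and duplication.

    A sketch: `seen` grows without bound; a real implementation would keep
    only a sliding window of recent sequence numbers.
    """

    def __init__(self) -> None:
        self.highest = -1   # highest sequence number seen so far
        self.seen = set()

    def on_packet(self, seq: int) -> str:
        if seq in self.seen:
            return "duplicate"
        self.seen.add(seq)
        if seq > self.highest:
            gap = seq - self.highest - 1  # numbers skipped: possibly lost
            self.highest = seq
            return f"new, {gap} skipped" if gap else "new"
        return "late"  # lower than one already seen: arrived out of order

    def missing(self) -> list:
        """Sequence numbers below the highest seen that never arrived."""
        return sorted(set(range(self.highest + 1)) - self.seen)

tracker = SequenceTracker()
for seq in [0, 1, 3, 2, 3, 5]:
    print(seq, tracker.on_packet(seq))
print("missing:", tracker.missing())  # missing: [4]
```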

The Checksum: Ensuring Data Integrity

Even though UDP does not guarantee delivery, it does provide a basic mechanism for ensuring data integrity: the Checksum. The Checksum is a calculated value that is included in the UDP header.

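This value is computed over a pseudo-header (the source and destination IP addresses, the protocol number, and the UDP length), the UDP header, and the data, as the one’s complement of the one’s complement sum of their 16-bit words.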

The receiver recalculates the Checksum upon receiving the packet and compares it to the value in the header. If the two values don’t match, it indicates that the data has been corrupted during transmission.

While the Checksum doesn’t prevent data corruption, it allows the receiver to detect it and discard the corrupted packet. This is crucial for maintaining data integrity, even if delivery is not guaranteed.

However, it’s important to note that the UDP Checksum is not foolproof and may not detect all types of errors. For applications requiring higher levels of data integrity, additional error detection and correction mechanisms may be necessary.
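The weakness is easy to demonstrate. Because the checksum is built from a one’s complement sum, the order of the 16-bit words does not matter: a single flipped bit changes the result, but two words swapped in transit leave it unchanged. The sketch below computes the checksum over a payload only, omitting the pseudo-header and UDP header for brevity; that simplification does not affect the point being shown.

```python
import struct

def internet_checksum(data: bytes) -> int:
    """16-bit one's complement of the one's complement sum (as used by UDP)."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)  # end-around carry
    return ~total & 0xFFFF

original = b"ABCDEFGH"
bit_flipped = b"ABCDEFGI"    # last byte 'H' (0x48) -> 'I' (0x49): one bit changed
words_swapped = b"CDABEFGH"  # first two 16-bit words exchanged

print(internet_checksum(original) == internet_checksum(bit_flipped))    # False
print(internet_checksum(original) == internet_checksum(words_swapped))  # True
```

Applications that need stronger guarantees typically add a CRC or a cryptographic hash or MAC over the payload.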

Having acknowledged the challenges posed by UDP’s inherent lack of reliability and explored strategies to compensate for packet loss, it’s time to place UDP in context. To truly grasp UDP’s role, it’s essential to compare it directly with its more widely known counterpart: the Transmission Control Protocol (TCP). By examining the fundamental differences in their design, performance characteristics, and ideal applications, a clearer understanding of each protocol’s strengths and weaknesses emerges.

UDP vs TCP: A Detailed Comparison of Protocols

UDP and TCP represent fundamentally different approaches to data transmission. Understanding the trade-offs between them is key to choosing the right protocol for a given application. TCP prioritizes reliability, while UDP prioritizes speed. This difference stems from their core design philosophies and has profound implications for their performance and suitability in various scenarios.

Reliability vs. Speed: The Fundamental Trade-off

TCP offers reliable, ordered data transfer. It achieves this through connection establishment (the three-way handshake), packet sequencing, error detection, and retransmission mechanisms. If a packet is lost or corrupted, TCP retransmits it until it is acknowledged, or until it gives up and aborts the connection. This guarantee comes at a cost: increased overhead and latency.

UDP, on the other hand, sacrifices reliability for speed. It forgoes connection establishment, sequencing, and error recovery. Packets are simply sent without any guarantee of delivery or order. While this makes UDP susceptible to packet loss and out-of-order delivery, it also significantly reduces overhead and latency.

The choice between TCP and UDP, therefore, depends on the application’s requirements. If data integrity is paramount (e.g., file transfer, web browsing), TCP is the clear choice. If speed and low latency are critical (e.g., online gaming, real-time video streaming), UDP may be more appropriate, provided that the application can tolerate some data loss.

Overhead: The Cost of Reliability

The overhead associated with a protocol refers to the additional data transmitted beyond the actual payload. This includes headers, control packets, and acknowledgements.

TCP’s reliability mechanisms introduce significant overhead. The three-way handshake adds connection establishment time, while acknowledgements and retransmissions consume bandwidth. The TCP header itself is larger than the UDP header (at least 20 bytes versus UDP’s fixed 8), containing fields for sequence numbers, acknowledgement numbers, and control flags.

UDP’s minimalist design results in significantly less overhead. The UDP header is small, containing only source and destination ports, length, and checksum. The absence of connection management and error recovery mechanisms further reduces overhead, making UDP more efficient for transmitting small amounts of data or when bandwidth is limited.
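A quick back-of-the-envelope comparison makes the header difference concrete. The sketch assumes IPv4 with no options (a 20-byte IP header) and a TCP header with no options (20 bytes, its minimum); real TCP segments often carry options such as timestamps, which widen the gap. It counts only per-packet headers, not handshake or ACK traffic.

```python
IPV4_HEADER = 20  # bytes, no IP options (assumed)
UDP_HEADER = 8    # fixed size
TCP_HEADER = 20   # minimum size, no TCP options (assumed)

def header_overhead(payload: int, transport_header: int) -> float:
    """Fraction of each packet's bytes spent on IPv4 + transport headers."""
    headers = IPV4_HEADER + transport_header
    return headers / (headers + payload)

for payload in (20, 100, 1000):
    udp = header_overhead(payload, UDP_HEADER)
    tcp = header_overhead(payload, TCP_HEADER)
    print(f"{payload:>5}-byte payload: UDP {udp:.1%}  TCP {tcp:.1%}")
```

The difference matters most for small, frequent messages such as game state updates or DNS queries; for large payloads both overheads shrink to a few percent.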

However, it’s important to note that while UDP has lower protocol overhead, the application layer may need to implement its own reliability mechanisms, which can add to the overall overhead.

Use Cases: Matching Protocols to Applications

The contrasting characteristics of TCP and UDP make them suitable for different types of applications.

TCP is ideal for applications that require reliable data transfer, such as:

- Web browsing (HTTP/1.1 and HTTP/2; HTTP/3 instead runs over QUIC, a reliable transport built on UDP)

- Email (SMTP, POP3, IMAP)

- File transfer (FTP, SFTP)

- Remote access (SSH, Telnet)

UDP is preferred for applications that prioritize speed and low latency, even if it means tolerating some data loss, such as:

- Online gaming

- Real-time video streaming

- Voice over IP (VoIP)

- DNS lookups

- Network monitoring

In essence, TCP is the workhorse for reliable data transfer, while UDP is the sprinter for time-sensitive applications. The selection between them hinges on a careful consideration of the specific needs of the application and the acceptable trade-offs between reliability and speed.

Having navigated the nuanced landscape of TCP and UDP, comparing their reliability, speed, and suitability for diverse applications, the conversation naturally shifts to a critical aspect often overshadowed in discussions of UDP: security. UDP’s inherent design characteristics, while advantageous in certain contexts, also introduce unique security challenges that developers and network administrators must address.

Security Considerations: Protecting UDP-Based Applications

UDP, by its very nature, presents a different security profile compared to connection-oriented protocols like TCP. Its connectionless nature, while contributing to its speed and efficiency, also opens doors to specific vulnerabilities that must be carefully considered and mitigated. Understanding these vulnerabilities is the first step in securing UDP-based applications.

Inherent Vulnerabilities of UDP

Several factors contribute to the potential security risks associated with UDP. These risks stem largely from its stateless nature and the absence of built-in mechanisms for authentication and session management.

Lack of Connection Establishment

Unlike TCP, UDP doesn’t require a handshake to establish a connection. This makes it susceptible to attacks where malicious actors can send packets to a target without needing prior authorization.

This absence of connection tracking allows for easier spoofing and amplification attacks.

IP Address Spoofing

It’s relatively easy to spoof the source IP address in UDP packets. This can be used to launch Distributed Denial-of-Service (DDoS) attacks, where attackers flood a target with traffic from numerous spoofed addresses, making it difficult to identify and block the source of the attack.

UDP’s stateless nature makes source IP address verification challenging.

Amplification Attacks

UDP-based protocols such as DNS can be exploited for amplification attacks. Attackers send small queries to a vulnerable server, spoofing the victim’s IP address as the source. The server then sends a much larger response to the victim, amplifying the attack traffic.

DNS amplification attacks leveraging UDP are a common and potent threat.

Lack of Built-in Security Mechanisms

UDP itself does not provide any built-in security mechanisms like encryption or authentication. This means that if security is required, it must be implemented at the application layer, adding complexity to the development process.

Security therefore falls to the application developer, or to a protocol layered on top of UDP such as DTLS.

Best Practices for Securing UDP Applications

Despite these challenges, UDP applications can be secured by implementing appropriate security measures at the application and network layers. A layered approach, combining multiple security techniques, is often the most effective strategy.

Input Validation and Sanitization

Always validate and sanitize all input received from UDP packets. This helps to prevent injection attacks and other forms of data manipulation. Ensure that the data conforms to expected formats and lengths.

Rate Limiting

Implement rate limiting to restrict the number of UDP packets accepted from a particular source within a given timeframe. This can help to mitigate DDoS attacks and other forms of traffic flooding.

Rate limiting provides a crucial defense against volumetric attacks.
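One common way to implement per-source rate limiting is a token bucket: each source address gets a bucket that refills at a fixed rate up to a burst capacity, and each accepted datagram spends one token. A minimal sketch follows; the class name, rate, and burst values are assumptions, and the clock is injectable so the behavior can be tested deterministically. Keep in mind that when source addresses are spoofed, per-source limits alone cannot stop a distributed flood.

```python
import time
from collections import defaultdict

class TokenBucketLimiter:
    """Per-source token bucket: refill `rate` tokens/s up to `burst` tokens."""

    def __init__(self, rate: float, burst: float, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.clock = clock
        # Each new source starts with a full bucket: (tokens, last_update).
        self.buckets = defaultdict(lambda: (burst, clock()))

    def allow(self, source_ip: str) -> bool:
        tokens, last = self.buckets[source_ip]
        now = self.clock()
        # Add tokens for the time elapsed since this source was last seen.
        tokens = min(self.burst, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self.buckets[source_ip] = (tokens - 1, now)
            return True   # accept the datagram
        self.buckets[source_ip] = (tokens, now)
        return False      # drop (or deprioritize) the datagram

# Usage with a fake clock: a burst of 5 datagrams from one source at t=0.
fake_now = [0.0]
limiter = TokenBucketLimiter(rate=2.0, burst=3.0, clock=lambda: fake_now[0])
results = [limiter.allow("203.0.113.5") for _ in range(5)]
print(results)  # [True, True, True, False, False]
fake_now[0] = 1.0  # one second later, 2 tokens have been refilled
later = limiter.allow("203.0.113.5")
print(later)  # True
```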

Authentication and Authorization

Even though UDP lacks built-in authentication, application-level authentication mechanisms can be implemented. This might involve using shared secrets, digital signatures, or other methods to verify the identity of the sender.

Application-level authentication adds a layer of trust to UDP communications.
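As one example of the shared-secret approach, the sender can append an HMAC-SHA256 tag computed over the payload, and the receiver recomputes it and compares in constant time using Python's standard `hmac` module. The framing (payload followed by a 32-byte tag), the function names, and the key handling are assumptions for illustration. This authenticates the data but does not encrypt it, and on its own it does not stop an attacker from replaying a captured datagram; a sequence number or timestamp inside the authenticated payload would be needed for that.

```python
import hashlib
import hmac
import secrets

TAG_LEN = 32  # HMAC-SHA256 produces a 32-byte tag

def seal(key: bytes, payload: bytes) -> bytes:
    """Append an HMAC-SHA256 tag to the payload."""
    return payload + hmac.new(key, payload, hashlib.sha256).digest()

def open_sealed(key: bytes, datagram: bytes):
    """Return the payload if the tag verifies, else None (drop the datagram)."""
    if len(datagram) < TAG_LEN:
        return None  # too short to carry a tag: reject before any parsing
    payload, tag = datagram[:-TAG_LEN], datagram[-TAG_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    # compare_digest avoids leaking how many bytes matched via timing.
    return payload if hmac.compare_digest(tag, expected) else None

key = secrets.token_bytes(32)  # shared secret, distributed out of band (assumed)
datagram = seal(key, b"move player 7 to (10, 4)")
tampered = bytearray(datagram)
tampered[0] ^= 0x01  # flip one bit in transit

print(open_sealed(key, datagram))         # b'move player 7 to (10, 4)'
print(open_sealed(key, bytes(tampered)))  # None
```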

Encryption

Encrypting UDP payloads can protect sensitive data from eavesdropping. This can be achieved using protocols like DTLS (Datagram Transport Layer Security), which is specifically designed for securing UDP-based communications.

Encryption ensures confidentiality, even if packets are intercepted.

Firewalls and Access Control Lists (ACLs)

Configure firewalls and ACLs to restrict access to UDP ports and services. Only allow traffic from trusted sources, and block suspicious or unauthorized traffic.

Firewalls act as gatekeepers, filtering malicious UDP traffic.

Monitoring and Logging

Implement robust monitoring and logging to detect and respond to security incidents. Monitor UDP traffic for unusual patterns, such as sudden spikes in traffic volume or traffic from unexpected sources.

Proactive monitoring enables rapid detection and response to threats.

Regular Security Audits and Penetration Testing

Conduct regular security audits and penetration testing to identify and address vulnerabilities in UDP-based applications. This helps to ensure that security measures are effective and up-to-date.

Periodic assessments uncover weaknesses before they can be exploited.

By understanding the inherent vulnerabilities of UDP and implementing these best practices, developers and network administrators can significantly enhance the security of UDP-based applications and protect them from a wide range of threats.

FAQs About UDP Connectionless Protocol

This FAQ section addresses common questions about the UDP connectionless protocol and its faster transfer speeds. We hope this helps clarify any uncertainties you may have.

What makes UDP connectionless?

Unlike TCP, UDP does not establish a dedicated connection before sending data. Each UDP packet is sent independently, without prior handshaking. This "fire and forget" approach is a core characteristic of the UDP connectionless protocol.

Why is the UDP connectionless protocol faster than TCP?

Skipping connection establishment and retransmission significantly speeds up UDP transfers. TCP requires a three-way handshake and retransmits lost packets, both of which add latency. UDP prioritizes speed over reliability, making it suitable for applications where some data loss is acceptable.

What are the downsides of using a UDP connectionless protocol?

The main disadvantage is the lack of guaranteed delivery: packets can be lost, arrive out of order, or be duplicated. Applications that need reliability must implement their own error-handling mechanisms on top of UDP.

When is UDP a better choice than TCP?

UDP is generally preferred for applications like online gaming, video streaming, and DNS lookups, where low latency is paramount, and minor data loss is tolerable. The speed advantage of the UDP connectionless protocol outweighs the reliability concerns in these scenarios.

Hopefully, you now have a better grasp of how UDP’s connectionless design gets data moving quickly! Go forth and build amazing things!