Parametric equations offer a flexible way to describe the curve of an ellipse. Desmos, a popular graphing calculator, makes this relationship easy to visualize by plotting the parametric equations of an ellipse directly. The representation matters to engineers optimizing satellite orbits and to mathematicians building on the work of Johannes Kepler, who showed that planetary paths are elliptical. This exploration covers the core concepts behind the parametric equations of an ellipse and provides the tools needed to work with this representation.

Python, with its extensive ecosystem of packages, is a powerful language. But this strength can become a source of complexity when managing project dependencies. Imagine working on several Python projects simultaneously, each requiring different versions of the same library. Without a proper isolation mechanism, conflicts are inevitable. This is where virtual environments step in, offering a solution to dependency chaos and ensuring a smooth development experience.

What is a Python Virtual Environment?

A virtual environment is essentially a self-contained directory that holds a specific Python interpreter and its associated packages. Think of it as a sandbox, isolated from your system’s global Python installation. When you activate a virtual environment, your system temporarily prioritizes the Python interpreter and packages within that environment. This allows you to install, upgrade, and remove packages without affecting other projects or the system’s core Python installation.
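One way to see this isolation from inside Python is to compare two interpreter attributes. The sketch below assumes an environment created with venv (or a compatible tool); the helper name in_virtual_environment is made up for illustration.

```python
import sys


def in_virtual_environment() -> bool:
    """Return True when the running interpreter belongs to a virtual environment."""
    # Inside a venv, sys.prefix points at the environment directory, while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("interpreter:", sys.executable)
    print("in virtual environment:", in_virtual_environment())
```

Running it once with an environment active and once without should show both the interpreter path and the flag changing.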

The Dependency Management Dilemma: Why Virtual Environments Matter

Without virtual environments, installing packages globally can quickly lead to conflicts. Project A might require version 1.0 of a library, while Project B needs version 2.0. Installing both globally would inevitably break one project or the other. Virtual environments solve this problem by providing project-specific package repositories.

Virtual environments address two primary concerns: dependency management and project isolation.

Dependency management ensures that each project has the exact versions of the packages it needs to function correctly. Project isolation prevents conflicts between different projects by creating separate environments for each.

Benefits of Embracing Virtual Environments

The advantages of using virtual environments are numerous and contribute significantly to a cleaner, more organized, and reproducible development workflow.

Avoiding System-Wide Package Conflicts

As previously mentioned, global package installations can create conflicts. Virtual environments eliminate this risk by isolating dependencies. Each project gets its own dedicated space, preventing version clashes and ensuring that projects don’t interfere with each other.

Reproducibility of Projects

Reproducibility is crucial for collaboration and deployment. A virtual environment, along with a requirements.txt file (which we’ll discuss later), allows you to recreate the exact environment needed for a project to run correctly. This ensures that anyone can easily set up the project on their machine with the correct dependencies, regardless of their system configuration.

Maintaining a Clean and Organized Development Environment

Using virtual environments promotes a cleaner and more organized development environment. It prevents your system’s global Python installation from becoming cluttered with packages that are only needed for specific projects. This makes it easier to manage your Python installations and reduces the risk of unexpected errors caused by conflicting dependencies.

A Glimpse at venv and Other Tools

The Python standard library includes the venv module, which is a lightweight and readily available tool for creating virtual environments. It’s the recommended approach for most Python projects.

The basic usage involves using the command line to create the environment (shown later).

Beyond venv, alternative tools like conda and poetry offer additional features and benefits, such as more advanced dependency resolution and environment management capabilities. However, venv is often sufficient for many projects and provides a solid foundation for understanding virtual environments.

Without the isolation and organization that virtual environments provide, you’re essentially building on a foundation of shifting sands. Luckily, Python provides a straightforward way to create these isolated spaces, ensuring a more predictable and manageable development process. Let’s walk through setting up your first virtual environment using the built-in venv module.

Setting Up: Creating Your First Virtual Environment

The venv module, included with Python 3.3 and later, is the standard tool for creating virtual environments. It’s simple to use and integrates well with Python’s ecosystem. Let’s explore how to leverage it for your projects.

The python -m venv Command

The core command for creating a virtual environment is:

python -m venv <environment_name>

Let’s break this down:

- python: This invokes your Python interpreter. Ensure the correct version of Python is being used if you have multiple versions installed.
- -m venv: This tells Python to run the venv module as a script.
- <environment_name>: This is the name you choose for your virtual environment directory. Choose a descriptive name.

Naming Conventions and Examples

While you can technically name your virtual environment anything, certain conventions are widely followed. Here are some examples:

- myenv: A simple and common name.
- venv: Short and to the point. This is frequently used.
- .venv: A hidden directory, often preferred to keep your project root clean. The leading dot hides the directory by default in many file explorers.

For example, to create a virtual environment named "myprojectenv" you would run:

python -m venv myprojectenv
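The same step can also be scripted with the standard library's venv module, which is what the command runs under the hood. This is a minimal sketch rather than a replacement for the command: create_environment is a hypothetical helper, and the demo builds the environment in a temporary directory and skips pip so it runs quickly.

```python
import os
import tempfile
import venv
from pathlib import Path


def create_environment(env_dir: Path, with_pip: bool = True) -> Path:
    """Create a virtual environment, roughly like `python -m venv <env_dir>`."""
    venv.create(
        env_dir,
        with_pip=with_pip,           # the command line bootstraps pip by default
        symlinks=(os.name != "nt"),  # the command line uses symlinks except on Windows
    )
    return env_dir / "pyvenv.cfg"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # with_pip=False keeps this demo quick; leave it out to install pip too.
        cfg = create_environment(Path(tmp) / "myprojectenv", with_pip=False)
        print(cfg.name, "exists:", cfg.exists())
```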

Anatomy of a Virtual Environment

After running the creation command, a new directory (named according to your chosen <environment_name>) will be created. Let’s peek inside and understand its structure:

- pyvenv.cfg: This crucial file contains configuration settings for the environment, including the location of the base Python installation it was created from. Don’t manually edit this file unless you know what you’re doing.
- Scripts (Windows) or bin (macOS/Linux): This directory holds the activation scripts and other executables necessary to activate and use the environment. The activation scripts are platform-specific.
- Lib\site-packages (Windows) or lib/pythonX.Y/site-packages (macOS/Linux, where X.Y is the Python version): This is where all the packages you install within the virtual environment will reside. This directory isolates your project’s dependencies from the global Python installation. This is the heart of the environment’s isolation.
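To look at this layout on your own machine, the sketch below creates a throwaway environment, reads pyvenv.cfg, and lists the top-level entries. The helper read_pyvenv_cfg is hypothetical and assumes the file consists of simple key = value lines.

```python
import tempfile
import venv
from pathlib import Path


def read_pyvenv_cfg(env_dir: Path) -> dict[str, str]:
    """Parse the `key = value` lines of pyvenv.cfg into a dictionary."""
    settings = {}
    for line in (env_dir / "pyvenv.cfg").read_text(encoding="utf-8").splitlines():
        key, separator, value = line.partition("=")
        if separator:  # skip anything that is not a key = value line
            settings[key.strip()] = value.strip()
    return settings


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        env_dir = Path(tmp) / "demo-env"
        venv.create(env_dir, with_pip=False)  # no pip, so the demo stays quick
        for key, value in read_pyvenv_cfg(env_dir).items():
            print(f"{key} = {value}")
        # Top-level entries: pyvenv.cfg plus the platform-specific directories.
        print(sorted(entry.name for entry in env_dir.iterdir()))
```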

Choosing the Right Location

Where you place your virtual environment matters. Here are some considerations:

- Within the project directory: A common practice is to create the virtual environment directly within your project’s root directory (e.g., myproject/.venv). This keeps everything self-contained and makes it easy to distribute the project.
- Outside the project directory: You can also create a central directory for all your virtual environments. This can be useful if you have many small projects or prefer a more organized file system. However, remember to keep track of which environment belongs to which project.
- Avoid system directories: Never create virtual environments in system-protected directories or within the Python installation directory itself. Doing so could lead to permission issues and conflicts.

Activation Station: Stepping into Your Virtual World

Creating a virtual environment is only half the battle. To truly harness its power, you need to activate it. Activation is the process that configures your shell to use the virtual environment’s Python interpreter and installed packages. Think of it as stepping through a portal into a self-contained Python universe.

Activating on Different Operating Systems: A Platform-Specific Guide

The activation process differs slightly depending on your operating system. Let’s explore the specific commands for Windows, macOS, and Linux.

Windows Activation

On Windows, activation is typically done from the Command Prompt (cmd.exe). Navigate to the directory that contains your virtual environment and execute the following command:

.\<environment_name>\Scripts\activate

Replace <environment_name> with the actual name of your environment. For example, if your environment is named "myprojectenv", the command would be:

.\myprojectenv\Scripts\activate

If you are using PowerShell on Windows, the activation command is different:

.\<environment_name>\Scripts\Activate.ps1

macOS and Linux Activation

For macOS and Linux users, the process is similar. Open your terminal, navigate to the directory that contains the virtual environment, and run this command:

source <environment_name>/bin/activate

Again, replace <environment_name> with the name of your virtual environment. So, for an environment named "myprojectenv", you’d use:

source myprojectenv/bin/activate

The source command (also written as .) executes the activation script within your current shell.

Identifying an Active Environment: The Shell’s Tell-Tale Sign

How do you know if your virtual environment is active? The most obvious sign is a change in your shell prompt. Typically, the name of the virtual environment will appear in parentheses at the beginning of the prompt:

(myprojectenv) C:\path\to\your\project>

This visual cue confirms that your shell is now operating within the confines of the virtual environment.

Behind the Scenes: What Activation Actually Does

Activation isn’t magic; it’s configuration. The activation script modifies your shell’s PATH environment variable, and that change is the key to everything else.

The PATH variable tells your operating system where to look for executable files (like python or pip).

Activation prepends the virtual environment’s Scripts (Windows) or bin (macOS/Linux) directory to the PATH. This ensures that when you type python, you’re using the virtual environment’s Python interpreter, not the system-wide one.

This redirection is crucial for isolating your project’s dependencies.
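The effect of prepending a directory to PATH can be modeled in a few lines. This is a simplified sketch of the lookup, not how any real shell is implemented: resolve and prepend_to_path are hypothetical helpers, the "python" files are empty placeholders, and executable bits and Windows file extensions are ignored.

```python
import os
import tempfile
from pathlib import Path


def resolve(command: str, path: str):
    """Return the first PATH entry that contains `command`, or None."""
    for directory in path.split(os.pathsep):
        candidate = Path(directory) / command
        if candidate.is_file():
            return candidate
    return None


def prepend_to_path(directory: Path, path: str) -> str:
    """Put `directory` in front of the existing PATH string."""
    return os.pathsep.join([str(directory), path])


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        system_bin = Path(tmp) / "system" / "bin"
        env_bin = Path(tmp) / "myprojectenv" / "bin"
        for directory in (system_bin, env_bin):
            directory.mkdir(parents=True)
            (directory / "python").touch()  # placeholder, not a real interpreter
        path = str(system_bin)
        print("before:", resolve("python", path))
        print("after: ", resolve("python", prepend_to_path(env_bin, path)))
```

Because the search stops at the first match, whichever directory comes first in PATH wins, which is why the environment's directory is placed at the front.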

Troubleshooting Activation Issues: Common Problems and Solutions

Sometimes, activation doesn’t go as planned. Here are a few common issues and how to address them:

- "Activate.ps1 is not digitally signed" (PowerShell): PowerShell’s execution policy might be preventing the script from running. You can allow it with Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser. Note that this setting persists for your user account; to limit the change to the current PowerShell session, use -Scope Process instead. Either way, understand the security implications before changing your execution policy.
- "Command not found" or similar errors: Ensure you’re in the correct directory and have typed the activation command correctly. Double-check the path to the activate script.
- Incorrect Python version: If you have multiple Python versions installed, the activated environment might be using the wrong one. An environment uses the Python version that created it, so verify that the desired version is associated with the virtual environment. Consider using python3 instead of python when creating the environment if you’re targeting Python 3 specifically.

By understanding the activation process and being prepared to troubleshoot common issues, you can confidently step into your virtual environment and begin developing in a clean, isolated, and reproducible environment.

Package Power: Installing Packages Within the Environment

Now that you’ve successfully activated your virtual environment, you’re standing on the precipice of true project isolation. It’s time to populate that sterile environment with the specific tools and libraries your project demands. This is where pip, the Python package installer, truly shines.

Unleashing pip Within Your Virtual Fortress

pip is the command-line tool that interfaces with the Python Package Index (PyPI), a vast repository of open-source Python packages.

Within your activated virtual environment, pip knows to install packages only within that environment, leaving your system-wide Python installation untouched. This is the core principle that prevents dependency conflicts and ensures project reproducibility.

To install a package, simply use the command: pip install <package_name>. For example, to install the popular requests library for making HTTP requests, you would type pip install requests and press Enter. pip will then download the latest version of requests and install it, along with any dependencies, directly into your virtual environment’s site-packages directory.

Specifying Versions: A Key to Reproducibility

In the ever-evolving world of software, packages are constantly being updated. While updates often bring improvements and bug fixes, they can also introduce breaking changes that can wreak havoc on your project.

To mitigate this risk and ensure that your project will always run as expected, it’s crucial to specify the exact versions of the packages you’re using.

You can install a specific version of a package using the == operator: pip install <package_name>==<version>. For example, to install version 2.26.0 of the requests library, you would use pip install requests==2.26.0. This guarantees that your project will always use that particular version, regardless of any subsequent updates.
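After installing a pinned version, you may want to confirm from Python which version actually ended up in the active environment. The sketch below uses the standard library's importlib.metadata; installed_version and matches_pin are hypothetical helper names, and the result depends entirely on what is installed where you run it.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package_name: str):
    """Return the installed version string, or None if the package is absent."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        return None


def matches_pin(package_name: str, pinned_version: str) -> bool:
    """Check whether the installed version equals the pinned one exactly."""
    return installed_version(package_name) == pinned_version


if __name__ == "__main__":
    # Prints None unless requests is installed in the current environment.
    print("requests:", installed_version("requests"))
    print("matches 2.26.0:", matches_pin("requests", "2.26.0"))
```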

Keeping Up-to-Date: The Upgrade Command

While pinning package versions is essential for stability, there may be times when you need to upgrade to a newer version. This might be to take advantage of new features, bug fixes, or security patches.

To upgrade a package to the latest available version, use the command: pip install --upgrade <package_name>. For instance, pip install --upgrade requests will upgrade the requests library to the newest version compatible with your Python environment.

However, after upgrading, it’s always a good idea to thoroughly test your project to confirm that the new version doesn’t introduce any compatibility issues.

The requirements.txt File: Your Project’s Dependency Blueprint

As your project grows, the number of packages it depends on will likely increase. Manually tracking and installing each package and its specific version can become cumbersome and error-prone. This is where the requirements.txt file comes in handy.

The requirements.txt file is a simple text file that lists all of your project’s dependencies, along with their versions. It serves as a blueprint for recreating your project’s environment on any machine.

Generating the requirements.txt

Creating a requirements.txt file is a breeze. Simply activate your virtual environment and run the command: pip freeze > requirements.txt.

This command tells pip to list all the installed packages and their versions and then redirect the output to a file named requirements.txt.

This file can then be shared with other developers or used to deploy your project to a production environment.
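To get a feel for what ends up in that file, here is a rough Python approximation that builds name==version lines from the packages visible to the current interpreter. It is only a sketch: frozen_requirements is a hypothetical helper, and the real pip freeze handles cases this ignores, such as editable installs and the packages it leaves out by default.

```python
from importlib.metadata import distributions


def frozen_requirements() -> list[str]:
    """Return sorted `name==version` lines for the installed distributions."""
    pins = {}
    for dist in distributions():
        metadata = dist.metadata
        name = metadata.get("Name") if metadata is not None else None
        if name:  # skip distributions with broken or missing metadata
            pins[name.lower()] = f"{name}=={dist.version}"
    return [pins[key] for key in sorted(pins)]


if __name__ == "__main__":
    print("\n".join(frozen_requirements()))
```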

Installing from requirements.txt

To install the packages listed in a requirements.txt file, use the command: pip install -r requirements.txt. This command tells pip to read the requirements.txt file and install all the packages listed within it, along with their specified versions.

This ensures that everyone working on the project is using the same set of dependencies, eliminating potential compatibility issues and making collaboration much smoother.
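The pinned lines in such a file are simple enough to read programmatically, for example to compare two environments. The parser below is a deliberately simplified sketch: parse_pinned_requirements is a hypothetical helper that understands only name==version lines and comments, not the full requirements file syntax pip accepts (options, URLs, environment markers, version ranges).

```python
def parse_pinned_requirements(text: str) -> dict[str, str]:
    """Map package names to versions for each `name==version` line."""
    pins = {}
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments (simplified)
        if not line or "==" not in line:
            continue  # ignore blank lines and anything that is not an exact pin
        name, _, pinned = line.partition("==")
        pins[name.strip()] = pinned.strip()
    return pins


if __name__ == "__main__":
    sample = "# project dependencies\nrequests==2.26.0\n\nurllib3==1.26.7  # transitive\n"
    print(parse_pinned_requirements(sample))
```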

Using a requirements.txt file is a cornerstone of reproducible Python projects. It allows you to easily recreate your development environment, share your project with others, and deploy your project to production with confidence.

Deactivation Dynamics: Gracefully Exiting Your Virtual Environment

Now that you’ve harnessed the power of your virtual environment and installed all the necessary packages, it’s equally important to understand how to properly exit it. Just as entering the virtual environment isolates your project, deactivating it neatly returns your system to its original state.

Think of it as closing the door behind you, ensuring that your project’s dependencies don’t inadvertently interfere with other parts of your system. This is not just good practice, but essential for maintaining a clean and predictable development workflow.

The deactivate Command: Your Exit Strategy

Deactivating your virtual environment is surprisingly simple. Regardless of your operating system, the command is universally the same: deactivate.

Just type deactivate into your command line and press Enter. The parentheses containing your environment’s name will disappear from your shell prompt, signaling that you’ve successfully exited the virtual environment.

This single command effectively reverses the changes made during activation, restoring your system’s default Python environment.

The Mechanics of Deactivation: Reverting the Path

Understanding what happens behind the scenes during deactivation provides valuable insight into the process. When you activate a virtual environment, the activation script modifies your shell’s PATH environment variable.

This modification prepends the virtual environment’s bin (or Scripts on Windows) directory to the PATH, ensuring that when you type python or pip, you’re using the versions within the virtual environment, not the system-wide versions.

Deactivation reverses this process, removing the virtual environment’s directory from the PATH.

This ensures that subsequent commands use the system’s default Python installation and packages, effectively isolating your project’s dependencies when the environment is not active.
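The round trip can be modeled on a plain dictionary standing in for the shell's environment. This is a sketch of the idea, not the actual scripts: the activate and deactivate functions here are hypothetical, and the variable names _OLD_VIRTUAL_PATH and VIRTUAL_ENV follow what the POSIX activation script shipped with venv uses, which other shells may handle differently.

```python
import os


def activate(env: dict, env_dir: str) -> dict:
    """Return a copy of `env` with the environment's executables first on PATH."""
    bin_dir = os.path.join(env_dir, "Scripts" if os.name == "nt" else "bin")
    new_env = dict(env)
    new_env["_OLD_VIRTUAL_PATH"] = env.get("PATH", "")  # remember the original
    new_env["VIRTUAL_ENV"] = env_dir
    new_env["PATH"] = bin_dir + os.pathsep + env.get("PATH", "")
    return new_env


def deactivate(env: dict) -> dict:
    """Return a copy of `env` with the changes made by activate() undone."""
    new_env = dict(env)
    if "_OLD_VIRTUAL_PATH" in new_env:
        new_env["PATH"] = new_env.pop("_OLD_VIRTUAL_PATH")
    new_env.pop("VIRTUAL_ENV", None)
    return new_env


if __name__ == "__main__":
    before = {"PATH": os.pathsep.join(["/usr/local/bin", "/usr/bin"])}
    active = activate(before, "/home/user/myproject/.venv")  # illustrative path
    print("active PATH:  ", active["PATH"])
    print("restored PATH:", deactivate(active)["PATH"])
```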

Why Deactivation Matters: Cleanliness and Consistency

Deactivating your virtual environment might seem like a minor detail, but it’s a crucial step in maintaining a well-organized development environment.

Failing to deactivate can lead to unexpected behavior if you accidentally run commands intended for a specific project within the wrong environment.

By deactivating after you’re finished working on a project, you prevent potential conflicts and ensure that your system remains clean and predictable. This simple habit significantly contributes to long-term project maintainability and reduces the risk of introducing subtle bugs.

Best Practices: Deactivate as the Last Step

Make it a habit to deactivate your virtual environment as the last step when you finish working on a project. Before closing your terminal window or switching to a different project, type deactivate to ensure a clean break.

This small act can save you from future headaches and maintain the integrity of your development environment. Consistent deactivation demonstrates diligence and helps avoid accidental modifications to the wrong environment.

Beyond the Basics: Mastering Advanced Virtual Environment Techniques

With a solid grasp of the fundamentals – creation, activation, package management, and deactivation – you’re well-equipped to leverage virtual environments for basic Python projects. However, as your projects grow in complexity and scope, you’ll inevitably encounter scenarios that demand a deeper understanding and more sophisticated techniques.

This section explores several advanced aspects of virtual environments, designed to elevate your workflow from functional to truly efficient and robust. From juggling multiple environments to harnessing the power of environment variables and exploring alternative tools, we’ll venture beyond the basics to equip you with the knowledge to tackle even the most challenging development scenarios.

Managing Multiple Virtual Environments: A Project Portfolio Approach

As a developer, you’re likely working on multiple projects simultaneously, each with its own unique set of dependencies. Creating a dedicated virtual environment for each project becomes essential for maintaining isolation and preventing conflicts.

The standard practice involves creating a separate directory for each project and housing the virtual environment within that directory, often named .venv or env.

This naming convention allows you to easily identify the associated environment and, as we’ll discuss later, conveniently exclude it from version control.

Adopting this project-centric approach ensures that each project operates within its own isolated ecosystem, eliminating the risk of dependency clashes and guaranteeing reproducibility across different machines.

Harnessing Environment Variables: Dynamic Configuration

Environment variables provide a powerful mechanism for configuring your applications without hardcoding sensitive information or environment-specific settings directly into your code.

Within a virtual environment, you can leverage environment variables to customize the behavior of your application based on the environment in which it’s running (e.g., development, testing, production).

Python’s os module provides access to environment variables through os.environ. You can set and retrieve these variables programmatically, allowing your application to adapt dynamically to different environments.

Consider using libraries like python-dotenv to simplify the management of environment variables, especially in development environments, by loading variables from a .env file. Remember never to commit .env files containing sensitive information to version control.
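As a small illustration of reading configuration through os.environ, the sketch below collects a few settings with fallbacks. The variable names APP_ENV, APP_DEBUG, and DATABASE_URL and the helper load_settings are invented for this example; your project would use its own names.

```python
import os


def load_settings(environ=os.environ) -> dict:
    """Collect settings from environment variables, with development defaults."""
    return {
        "environment": environ.get("APP_ENV", "development"),
        "debug": environ.get("APP_DEBUG", "0") == "1",
        # No default here: None signals that the variable was not provided.
        "database_url": environ.get("DATABASE_URL"),
    }


if __name__ == "__main__":
    os.environ["APP_ENV"] = "testing"  # set programmatically for the demo
    print(load_settings())
```

Accepting the mapping as a parameter keeps the function easy to test, since a plain dictionary can stand in for the real environment.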

Expanding Your Toolkit: Conda and Poetry

While the venv module offers a solid foundation for managing virtual environments, alternative tools like Conda and Poetry provide enhanced features and cater to specific needs.

Conda: The Data Science Powerhouse

Conda is an open-source package, dependency, and environment management system. It’s particularly popular in the data science community due to its ability to manage not only Python packages but also system-level dependencies, including libraries written in C or C++.

Conda excels at creating isolated environments for projects with complex dependencies, and its support for non-Python packages makes it a versatile choice for data science workflows.

Poetry: Dependency Management Redefined

Poetry takes a more holistic approach to dependency management, focusing on project packaging and publishing in addition to environment isolation.

It uses a pyproject.toml file to declare project dependencies and manage the build process. Poetry automatically resolves dependencies, creates a virtual environment, and ensures that your project is packaged correctly for distribution.

Poetry resolves the complete dependency graph up front and records the exact resolved versions in a poetry.lock file, which tends to make installs more stable and predictable across machines. It is particularly well-suited for creating and publishing Python packages.

The choice between venv, Conda, and Poetry depends on the specific requirements of your project. venv is a great starting point for simple Python projects, while Conda is better suited for data science workflows, and Poetry excels at managing complex dependencies and packaging.

Version Control Considerations: Ignoring the Irrelevant

Virtual environment directories contain numerous files that are specific to your local machine and not relevant to the project’s codebase. Including these files in version control can lead to unnecessary bloat and potential conflicts.

To avoid this, it’s crucial to add the virtual environment directory (e.g., .venv, env) to your project’s .gitignore file. This tells Git to ignore the directory and its contents, preventing them from being tracked in the repository.

This ensures that only the essential source code and project configuration files are committed, maintaining a clean and manageable version control history.

By excluding the virtual environment from version control, you also ensure that other developers can easily recreate the environment on their own machines using the project’s requirements.txt or Poetry’s pyproject.toml file.
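If you set up many projects, adding the ignore entry can be automated. The helper below is a hypothetical sketch that appends an entry to a .gitignore file only when that exact line is not already present; it does not understand Git's pattern syntax, so .venv and .venv/ count as different entries.

```python
import tempfile
from pathlib import Path


def ensure_ignored(gitignore: Path, entry: str = ".venv/") -> bool:
    """Append `entry` to the file unless it is already listed; report if added."""
    text = gitignore.read_text(encoding="utf-8") if gitignore.exists() else ""
    if entry in (line.strip() for line in text.splitlines()):
        return False
    # Start on a fresh line if the existing file lacks a trailing newline.
    prefix = "" if not text or text.endswith("\n") else "\n"
    with gitignore.open("a", encoding="utf-8") as handle:
        handle.write(f"{prefix}{entry}\n")
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / ".gitignore"
        print("added:", ensure_ignored(target))
        print("added again:", ensure_ignored(target))
```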

FAQs: Understanding Ellipses and Parametric Equations

Hopefully, this section answers some common questions about parametric equations and their relationship to ellipses.

How does using parametric equations help describe an ellipse?

Parametric equations allow us to define the x and y coordinates of a point on the ellipse independently, using a parameter (usually ‘t’). This simplifies describing the ellipse’s shape and movement along it, rather than trying to directly relate x and y with a single equation. In many situations, the parametric form of an ellipse is easier to work with.

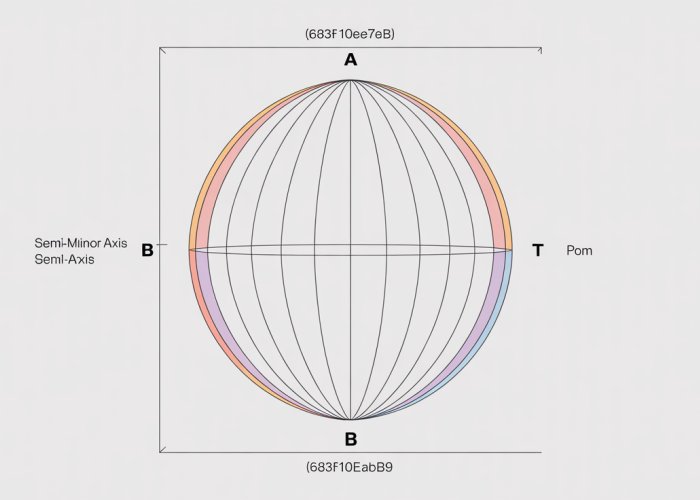

What are the standard parametric equations for an ellipse centered at the origin?

The standard parametric equations for an ellipse centered at the origin are x = a cos(t) and y = b sin(t), where ‘a’ is the semi-axis along x, ‘b’ is the semi-axis along y (the semi-major and semi-minor axes when a > b), and ‘t’ is the parameter, running from 0 to 2π. Plugging in different values of t generates the different points along the edge of the ellipse.

How do I adjust the parametric equations if the ellipse is not centered at the origin?

If the ellipse is centered at (h, k), you simply add ‘h’ to the x equation and ‘k’ to the y equation. So, the equations become x = h + a cos(t) and y = k + b sin(t). This shifts the entire ellipse to the new center.
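A short Python sketch makes it easy to check these equations numerically. The function names ellipse_point and ellipse_points are made up for illustration; the check at the end uses the Cartesian form ((x - h)/a)² + ((y - k)/b)² = 1, which every generated point should satisfy up to rounding error.

```python
import math


def ellipse_point(t: float, a: float, b: float, h: float = 0.0, k: float = 0.0):
    """Return the point (x, y) on the ellipse for parameter t."""
    return (h + a * math.cos(t), k + b * math.sin(t))


def ellipse_points(a: float, b: float, h: float = 0.0, k: float = 0.0, samples: int = 8):
    """Sample the ellipse at evenly spaced values of t in [0, 2*pi)."""
    return [
        ellipse_point(2 * math.pi * i / samples, a, b, h, k)
        for i in range(samples)
    ]


if __name__ == "__main__":
    a, b, h, k = 3.0, 2.0, 1.0, -1.0
    for x, y in ellipse_points(a, b, h, k):
        residual = ((x - h) / a) ** 2 + ((y - k) / b) ** 2 - 1
        print(f"x={x:.3f}  y={y:.3f}  residual={residual:.1e}")
```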

Can parametric equations represent other shapes besides ellipses?

Yes, parametric equations are a versatile tool for describing various curves and shapes. By changing the functions used for x(t) and y(t), you can represent lines, parabolas, hyperbolas, and even more complex, non-standard shapes. Understanding how the parametric equations of an ellipse behave provides a good foundation to build from.

So, that’s the gist of the parametric equations of an ellipse! Hopefully, things are a little clearer now. Go forth and conquer those curves!