The Java Virtual Machine (JVM) relies on dynamic linking: classes are loaded and their references resolved at runtime rather than when the program is built, which makes applications more flexible. Reflection builds on the same machinery to inspect and manipulate classes at runtime, and frameworks such as Spring use runtime class loading and reflection for dependency injection and modular application design. Understanding how these pieces fit together helps you get better performance out of Java dynamic linking.

Imagine a large enterprise application, creaking under the weight of its own monolithic structure. Startup times are agonizingly slow, resource consumption is exorbitant, and even minor updates require redeploying the entire system. This was the reality for many organizations until they discovered the power of Java Dynamic Linking.

But how exactly does dynamic linking solve this problem? Let’s begin with the core concept:

Defining Dynamic Linking in Java

In essence, dynamic linking is a mechanism where the resolution of symbolic references (such as references to other classes, methods, and fields) is deferred until runtime. Unlike static linking, where a linker resolves all dependencies when the program is built, dynamic linking allows the Java Virtual Machine (JVM) to load classes and resources only when they are actually needed.

This on-demand loading capability forms the bedrock of its performance advantages.

Consider it like this: instead of packing everything into one massive suitcase before a trip, you only pack the essentials and then acquire other items as you need them during your journey. This analogy highlights the resourcefulness of dynamic linking.
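To make the on-demand behavior concrete, here is a minimal sketch. The class names LazyInitDemo and Heavy are made up for illustration. It records the order of events to show that a class’s static initializer does not run when the program starts, only when the class is first actively used. Strictly speaking this observes initialization, the step that follows loading and linking, but it is the easiest part of the process to see from plain Java code.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class; the names are illustrative only.
final class LazyInitDemo {
    // Shared event log so the order of events can be inspected afterwards.
    static final List<String> EVENTS = new ArrayList<>();

    static final class Heavy {
        // Runs once, when Heavy is initialized by its first active use.
        static {
            EVENTS.add("Heavy initialized");
        }

        static int answer() {
            return 42;
        }
    }

    static List<String> run() {
        EVENTS.add("start");            // Heavy has not been touched yet
        int value = Heavy.answer();     // first use triggers initialization
        EVENTS.add("used Heavy: " + value);
        return EVENTS;
    }

    public static void main(String[] args) {
        run().forEach(System.out::println);
    }
}
```

On a fresh JVM the log shows "start" first, then "Heavy initialized", then "used Heavy: 42": the class did its setup work only at the moment it was needed.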

The Performance Promise: A Thesis

This article argues that dynamic linking offers significant performance benefits in Java applications by enabling resource optimization and promoting modularity.

These benefits manifest in several ways, including reduced startup times, minimized memory footprints, and increased flexibility for managing complex applications. Through efficient resource management, dynamic linking optimizes startup times by loading classes on demand.

By adopting modular design principles, developers can achieve higher code reusability and maintainability.

Demystifying Dynamic Linking within the JVM

The true magic of Java Dynamic Linking unfolds within the intricate architecture of the Java Virtual Machine (JVM). It’s not just a simple toggle; it’s a carefully orchestrated process involving various components working in harmony. Understanding these inner workings provides critical insight into how dynamic linking achieves its performance gains.

A Deep Dive into the JVM’s Dynamic Linking Mechanism

At its core, the JVM’s dynamic linking process hinges on resolving symbolic references at runtime.

When a Java class refers to another class, method, or field, these references are initially stored as symbolic names.

The JVM resolves these symbolic references into direct references (in practice, pointers to the loaded class, method, or field) only when the code is executed and the referenced component is first needed. This "on-demand" resolution is the key differentiator from static linking.

The resolution process involves asking a class loader to locate the class (for application classes, typically on the classpath), loading it into memory if it’s not already there, and then linking it to the requesting class. This late-binding approach allows the application to start quickly, as it only loads the classes that are immediately required.
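The separate steps of loading and initializing a class can be observed from ordinary code. The sketch below (LinkPhases and Target are hypothetical names) uses the three-argument Class.forName overload to load a class by name without initializing it, and then forces initialization in a second call.

```java
// Hypothetical demo; LinkPhases and Target are illustrative names.
final class LinkPhases {
    static final StringBuilder LOG = new StringBuilder();

    static final class Target {
        static {
            LOG.append("init;");   // runs only when Target is initialized
        }
    }

    static String run() {
        // Build the binary name as a string so the lookup happens by name at runtime.
        String name = LinkPhases.class.getName() + "$Target";
        ClassLoader loader = LinkPhases.class.getClassLoader();
        try {
            // initialize=false: locate and load the class, but run no static initializer.
            Class.forName(name, false, loader);
            LOG.append("loaded;");
            // initialize=true: the static initializer runs now (at most once per class).
            Class.forName(name, true, loader);
            LOG.append("done;");
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException("Target should be loadable by name", e);
        }
        return LOG.toString();
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

The first call to run() on a fresh JVM produces "loaded;init;done;", which shows that the class was already available before its initializer ran.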

Class Loaders: The Architects of Dynamic Loading

Class Loaders are fundamental to dynamic linking in Java. They are responsible for locating, loading, and defining classes during runtime.

Each class loader operates within a hierarchical structure, adhering to the delegation principle. When a class loader receives a request to load a class, it first delegates the request to its parent class loader.

This delegation continues up the hierarchy until it reaches the bootstrap class loader, which is responsible for loading core Java classes.

If a parent class loader can load the class, the request is satisfied. If not, the child class loader attempts to load the class itself. This prevents classes from being loaded multiple times and ensures consistency across the application.
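The hierarchy can be inspected directly. This small sketch (LoaderChain is a made-up name) walks from the system class loader up through its parents and reports which loader defined a given class. The API represents the bootstrap class loader as null, and the concrete loader class names printed depend on the Java version in use.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for inspecting the class loader hierarchy.
final class LoaderChain {
    // Describes the loader that defined a class; null means the bootstrap loader.
    static String loaderOf(Class<?> type) {
        ClassLoader loader = type.getClassLoader();
        return loader == null ? "bootstrap" : loader.getClass().getName();
    }

    // Walks from the system (application) class loader up through its parents.
    static List<String> chain() {
        List<String> names = new ArrayList<>();
        for (ClassLoader l = ClassLoader.getSystemClassLoader(); l != null; l = l.getParent()) {
            names.add(l.getClass().getName());
        }
        names.add("bootstrap");   // the top of the chain, represented by null in the API
        return names;
    }

    public static void main(String[] args) {
        System.out.println("String defined by: " + loaderOf(String.class));
        System.out.println("LoaderChain defined by: " + loaderOf(LoaderChain.class));
        System.out.println("Delegation chain: " + chain());
    }
}
```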

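There are three built-in class loaders in Java. Their names and search locations depend on the Java version:

- Bootstrap Class Loader: Loads the core Java libraries. In Java 8 and earlier these live in the jre/lib directory; since Java 9 they are the core modules of the runtime image.

- Extension Class Loader: In Java 8 and earlier, loads classes from the jre/lib/ext directory or any other directory specified by the java.ext.dirs system property. Java 9 removed the extension mechanism and replaced this loader with the Platform Class Loader.

- System Class Loader (Application Class Loader): Loads classes from the application’s classpath, which is specified by the -classpath or -cp command-line option or the CLASSPATH environment variable.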

Custom class loaders can also be defined, enabling developers to have fine-grained control over how classes are loaded, particularly in scenarios involving plugin architectures or dynamically generated code.
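A custom class loader usually only needs to override findClass(), because the inherited loadClass() already implements parent-first delegation. The sketch below is an illustration under simple assumptions: RecordingLoader is a hypothetical loader that never supplies any classes and merely records when delegation falls through to it, and com.example.MissingPlugin is a class name that is assumed not to exist.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo of the point at which a custom loader gets consulted.
final class DelegationDemo {
    static final class RecordingLoader extends ClassLoader {
        final List<String> findCalls = new ArrayList<>();

        RecordingLoader(ClassLoader parent) {
            super(parent);
        }

        // Called by loadClass() only after the parent chain failed to find the class.
        // A real plugin loader would read the class bytes here and call defineClass().
        @Override
        protected Class<?> findClass(String name) throws ClassNotFoundException {
            findCalls.add(name);
            throw new ClassNotFoundException(name);
        }
    }

    // Returns the names for which the custom loader's findClass() was consulted.
    static List<String> findCallsFor(String className) {
        RecordingLoader loader = new RecordingLoader(ClassLoader.getSystemClassLoader());
        try {
            loader.loadClass(className);
        } catch (ClassNotFoundException expected) {
            // Neither the parents nor this loader could supply the class.
        }
        return loader.findCalls;
    }

    public static void main(String[] args) {
        System.out.println(findCallsFor("java.util.ArrayList"));        // prints []
        System.out.println(findCallsFor("com.example.MissingPlugin"));  // prints [com.example.MissingPlugin]
    }
}
```

The first lookup is satisfied by a parent, so the custom loader is never asked. Only the second, which no parent can resolve, reaches findCalls.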

The JRE’s Role: Providing the Foundation

The Java Runtime Environment (JRE) is the bedrock upon which dynamic linking operates. It provides the necessary libraries, the JVM, and other components required to run Java applications.

The JRE’s core libraries are dynamically linked, enabling applications to utilize a wide range of functionalities without having to include them directly in their own code. This reduces the size of the application and promotes code reuse.

Furthermore, the JRE’s dynamic linking capabilities allow it to adapt to different environments and configurations. It can load native libraries and other resources as needed, enabling Java applications to interact with the underlying operating system and hardware.

In essence, the JRE provides the environment and the tools that make dynamic class loading possible. The JVM depends on the class libraries and runtime support bundled with it in the JRE; without them there would be nothing to locate, load, and link at runtime.

Understanding the interplay between the JVM, Class Loaders, and the JRE is crucial for grasping the power and flexibility of dynamic linking in Java. It is a sophisticated mechanism that enables developers to build modular, efficient, and adaptable applications.

The Multi-faceted Benefits of Dynamic Linking

The shift to dynamic linking in Java represents more than just a change in how code is loaded; it’s a fundamental shift in architectural thinking that unlocks a cascade of benefits. This includes significant improvements in performance, a natural pathway to modular application design, and more efficient memory management. Let’s examine these facets in greater detail.

Performance Optimization: Startup Time and Memory Footprint

One of the most immediate and noticeable advantages of dynamic linking is its impact on application startup time. Instead of loading all classes at the beginning, only the absolutely necessary classes are loaded initially. This lazy loading approach dramatically reduces the amount of time it takes for the application to become responsive.

This is particularly critical in large enterprise applications where lengthy startup times can be a major source of frustration for users.

Furthermore, dynamic linking minimizes the memory footprint of an application. By deferring the loading of classes until they are actually needed, the application consumes only the memory resources it requires at any given moment.

This contrasts sharply with static linking, where the entire application, along with all its dependencies, is bound into a single executable image regardless of whether those dependencies are ever used.
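One way to watch on-demand loading at work is the JDK’s ClassLoadingMXBean, which reports how many classes the JVM has loaded so far. The following sketch (LoadedClassCount and RarelyUsed are hypothetical names) reads the counter before and after the first use of a class. On a typical HotSpot JVM the second number is larger on the first call, though the exact figures vary and other threads may load classes in the meantime.

```java
import java.lang.management.ClassLoadingMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical demo; reads JVM-wide class loading counters.
final class LoadedClassCount {
    // A class that nothing references until measure() is called.
    static final class RarelyUsed {
        static String describe() {
            return "rarely used feature";
        }
    }

    // Returns {total classes loaded before, total classes loaded after} a use of RarelyUsed.
    static long[] measure() {
        ClassLoadingMXBean bean = ManagementFactory.getClassLoadingMXBean();
        long before = bean.getTotalLoadedClassCount();
        RarelyUsed.describe();   // on first call, RarelyUsed has to be loaded here
        long after = bean.getTotalLoadedClassCount();
        return new long[] {before, after};
    }

    public static void main(String[] args) {
        long[] counts = measure();
        System.out.println("Loaded before first use: " + counts[0]);
        System.out.println("Loaded after first use:  " + counts[1]);
    }
}
```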

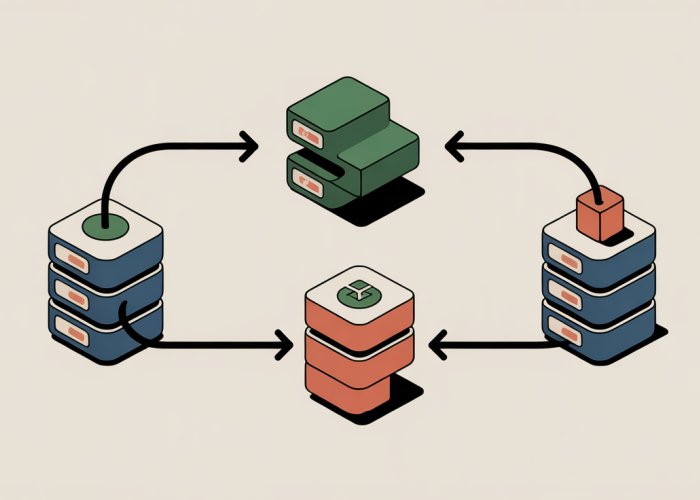

Embracing Modularity: Independent Modules and Plugins

Dynamic linking is a key enabler of modular application design. It allows developers to break down large, monolithic applications into smaller, independent modules or plugins.

Each module can be developed, tested, and deployed independently, promoting code reusability and simplifying maintenance.

This modularity is particularly beneficial in complex systems where different teams may be responsible for different parts of the application. Dynamic linking allows these teams to work in parallel without stepping on each other’s toes.

Furthermore, it facilitates the creation of plugin-based architectures, where new functionality can be added to an application without requiring a complete redeployment.

This makes applications more extensible and adaptable to changing business needs.
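One standard JDK mechanism for this kind of plugin discovery is java.util.ServiceLoader; it is offered here as one possible approach, not the only one. In the sketch below, PluginDiscovery and the Plugin interface are hypothetical. Implementations would be registered in a META-INF/services file named after the interface’s binary name, and since this example ships no such file, it simply finds nothing.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.ServiceLoader;

// Hypothetical sketch of plugin discovery with the JDK's ServiceLoader.
final class PluginDiscovery {
    // Hypothetical plugin contract; a real application would publish it in a shared API jar.
    public interface Plugin {
        String name();
    }

    // Finds every Plugin implementation that has been registered as a service provider.
    static List<String> discover() {
        List<String> names = new ArrayList<>();
        for (Plugin plugin : ServiceLoader.load(Plugin.class)) {
            names.add(plugin.name());   // providers are instantiated lazily, during iteration
        }
        return names;
    }

    public static void main(String[] args) {
        System.out.println("Discovered plugins: " + discover());
    }
}
```

Dropping a jar that contains an implementation and its registration file onto the classpath would make it appear in the list without any change to this code.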

Memory Management: Efficient Resource Utilization

Class loading and unloading also shape memory management. The JVM does not unload individual classes on request: a class becomes eligible for unloading only when its defining class loader is no longer reachable, at which point the garbage collector can reclaim the loader together with every class it defined.

This is particularly important in long-running applications, where a lingering reference to a plugin’s class loader keeps all of its classes in memory. Such leaks can accumulate over time, leading to performance degradation or even application crashes.

Giving each module or plugin its own class loader, and releasing that loader when the module is removed, allows for more granular control over memory usage.

Lazy Loading: Accelerating Application Startup

Lazy loading, a direct consequence of dynamic linking, offers a substantial boost to application startup times. It ensures that classes are loaded only when they are first referenced.

This contrasts sharply with static linking, where all classes are loaded at startup, regardless of whether they are immediately needed.

By deferring the loading of non-essential classes, lazy loading minimizes the initial overhead and allows the application to become responsive much faster.

This is particularly beneficial in applications with complex dependencies or large codebases.

In summary, the performance promise of dynamic linking is not just theoretical. It translates to tangible benefits in terms of reduced startup times, minimized memory footprints, and increased flexibility for managing complex applications. By enabling resource optimization and promoting modularity, dynamic linking empowers developers to build more efficient, maintainable, and adaptable Java applications.

Dynamic Linking and the Role of Shared Libraries

The Java ecosystem, while predominantly focused on platform independence, occasionally necessitates interaction with platform-specific resources. This is where shared libraries, and the mechanisms to access them, become crucial. Shared libraries are precompiled code modules that can be loaded into memory by multiple programs simultaneously, saving disk space and memory. But how do these libraries, typically written in languages like C or C++, integrate with the Java environment? And what role does dynamic linking play in facilitating this integration?

Shared Libraries in Java Applications

Shared libraries, bearing extensions like .dll (Windows), .so (Linux), and .dylib (macOS), are fundamentally external to the Java Virtual Machine (JVM). They contain native code—code compiled directly for the underlying hardware and operating system. Java applications leverage these libraries to access functionality not readily available or efficiently implemented in pure Java. This might include utilizing specific hardware features, interfacing with legacy systems, or employing high-performance algorithms optimized in C/C++.

The bridge between Java and these native libraries is the Java Native Interface (JNI). JNI allows Java code to call functions written in other programming languages, and vice versa. This involves defining native methods in Java, which are then implemented in a shared library.

A native library is loaded only when Java code explicitly requests it, typically through System.loadLibrary() in a static initializer of the class that declares the native methods. The JVM then binds each native method to its implementation in the library the first time the method is invoked. This on-demand linking avoids loading native code that is never used, contributing to faster startup times and reduced memory consumption.

Java Native Interface (JNI)

JNI is the cornerstone for integrating Java applications with native code residing in shared libraries. It defines a standard interface for Java code to interact with native libraries, enabling functionalities that are not natively supported by Java.

To use JNI, developers must first declare a native method in their Java class. This declaration informs the JVM that the method’s implementation resides in a native library.

Next, a corresponding implementation is created in a native language (usually C or C++), following the JNI specification for function naming and argument handling.

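Finally, the native code is compiled into a shared library, which the Java code loads with System.loadLibrary() or System.load(). At runtime, when the Java application calls the native method, the JVM looks up the matching function in the loaded libraries and executes it; if no loaded library provides it, the call fails with an UnsatisfiedLinkError.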
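The Java side of these steps can be sketched as follows. The class NativeAdd and the library name nativeadd are hypothetical, and no native implementation is included, so on a machine without such a library the program reports that it could not be found and skips the native call.

```java
// Hypothetical JNI example; only the Java side is shown.
final class NativeAdd {
    // Declared in Java, implemented in native code. For this class (in the default
    // package) the JNI naming convention expects a C function named Java_NativeAdd_add.
    static native int add(int a, int b);

    // Attempts to load a native library by its platform-independent name.
    static boolean tryLoad(String libraryName) {
        try {
            System.loadLibrary(libraryName);
            return true;
        } catch (UnsatisfiedLinkError e) {
            return false;   // library is not on java.library.path
        }
    }

    public static void main(String[] args) {
        String library = "nativeadd";   // hypothetical library name
        // mapLibraryName shows the platform-specific file name the JVM will search for.
        System.out.println("Expected file name: " + System.mapLibraryName(library));
        if (tryLoad(library)) {
            System.out.println("2 + 3 = " + add(2, 3));
        } else {
            System.out.println("Library not found on java.library.path; native call skipped");
        }
    }
}
```

System.mapLibraryName() turns the logical name into the platform-specific file name, such as a .dll, .so, or .dylib file, so the same Java code works across platforms as long as a matching library is deployed.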

Benefits of JNI

The primary benefit of JNI is the ability to access platform-specific features or leverage existing native codebases from Java applications. This enables tasks like:

- Hardware Access: Interacting directly with hardware devices (e.g., sensors, GPUs).

- Legacy Code Integration: Utilizing existing libraries written in languages like C or C++.

- Performance Optimization: Implementing performance-critical sections of code in native languages for increased speed.

Drawbacks and Considerations of JNI

While JNI offers significant advantages, it also introduces complexities and potential drawbacks:

- Platform Dependence: Native code is inherently platform-dependent, negating Java’s "write once, run anywhere" principle. The developer is responsible for ensuring that the correct version of the .dll, .so, or .dylib is provided for each deployment environment.

- Increased Complexity: JNI development is more complex than pure Java development, requiring expertise in both Java and native languages.

- Security Risks: Improperly handled JNI code can introduce security vulnerabilities, such as buffer overflows or memory corruption. Thorough security auditing is crucial.

- Performance Overhead: Calls between Java and native code involve a performance overhead due to the necessary data conversion and the transition between managed and native code.

- Memory Management: Developers are responsible for manual memory management in the native code, which can lead to memory leaks or other memory-related issues if not handled carefully. Memory leaks can severely impact application stability and performance.

In conclusion, while dynamic linking facilitates the integration of shared libraries and native code through JNI, developers must carefully weigh the benefits against the potential drawbacks. A thorough understanding of both Java and native technologies, along with robust testing and security practices, is essential for successful JNI development. Dynamic linking enhances Java’s flexibility, but responsibility for managing its complexities rests with the developer.

Shared libraries, accessible through JNI, provide a crucial bridge to native code. However, the story of dynamic linking and optimization within Java doesn’t end there. The HotSpot VM, Java’s most widely used virtual machine, takes dynamic linking to another level, leveraging it for sophisticated adaptive optimization strategies.

HotSpot VM: Dynamic Linking and Adaptive Optimization

The HotSpot Virtual Machine isn’t just a passive executor of bytecode; it’s a dynamic environment that constantly analyzes and optimizes running code. Dynamic linking plays a pivotal role in this process, enabling the VM to make informed decisions about optimization strategies based on runtime behavior.

The Role of Dynamic Linking in Adaptive Compilation

Adaptive compilation, also known as dynamic compilation or just-in-time (JIT) compilation, is a key feature of the HotSpot VM. It involves compiling frequently executed parts of the bytecode into native machine code during runtime.

This contrasts with static compilation, where code is compiled ahead of time. Dynamic linking informs the JIT compiler about the actual classes and methods being used, allowing it to tailor the generated machine code for specific runtime scenarios.

Profiling and Hotspot’s Optimization Decisions

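The HotSpot VM employs a technique called profiling to identify "hot spots" in the code: areas that are executed repeatedly. It counts method invocations and loop iterations as the program runs, and because classes are linked at runtime, these counts reflect the code that is actually loaded and executed rather than everything that was compiled.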

By observing which classes are loaded and which methods are called most frequently, the VM can prioritize these hot spots for JIT compilation. This ensures that optimization efforts are focused on the code sections that will yield the greatest performance improvements.

Polymorphism and Dynamic Linking’s Influence

Java’s support for polymorphism, where a single method call can invoke different implementations depending on the object’s type, presents a challenge for static compilation. Because classes are linked at runtime, however, the HotSpot VM knows which implementations are actually loaded and can record which receiver types each call site sees.

This information is used to perform method inlining, a powerful optimization technique where the code of the called method is inserted directly into the calling method, eliminating the overhead of a method call. Runtime type information makes method inlining more effective: a virtual call with only one possible target among the loaded classes can be inlined as if it were a direct call.

Deoptimization: Adapting to Changing Conditions

One of the remarkable features of the HotSpot VM is its ability to deoptimize code. If the assumptions made during JIT compilation become invalid (e.g., a newly loaded class overrides a method that the compiler had assumed to have a single implementation), the VM can discard the optimized code and resume executing the method in the interpreter.

Deoptimization is possible because the JIT compiler records a mapping between the optimized machine code and the original bytecode. Dynamic class loading is one of the main reasons this safety net is needed, since any class loaded later can change what the compiler was entitled to assume. The mapping allows the VM to seamlessly switch back to the unoptimized version when necessary, ensuring the application’s continued correct execution.
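The situation that inlining and deoptimization deal with can be reproduced with a few lines of code. In this sketch (all names are hypothetical) a single call site first sees only one implementation of an interface and later a second one. The program’s results are deterministic; what the JIT compiler does behind the scenes is not visible in the output and depends on the JVM version and settings.

```java
// Hypothetical demo of a call site whose set of receiver types grows at runtime.
final class CallSiteDemo {
    interface Shape {
        long area();
    }

    static final class Square implements Shape {
        private final long side;

        Square(long side) {
            this.side = side;
        }

        @Override
        public long area() {
            return side * side;
        }
    }

    static final class Rectangle implements Shape {
        private final long width;
        private final long height;

        Rectangle(long width, long height) {
            this.width = width;
            this.height = height;
        }

        @Override
        public long area() {
            return width * height;
        }
    }

    // shape.area() is a virtual call; the implementation is chosen per object at runtime.
    static long totalArea(Shape shape, int iterations) {
        long sum = 0;
        for (int i = 0; i < iterations; i++) {
            sum += shape.area();
        }
        return sum;
    }

    public static void main(String[] args) {
        // Phase 1: only Square has reached the call site, so a JIT compiler may
        // treat the call as having a single target.
        System.out.println(totalArea(new Square(3), 1_000_000));        // prints 9000000
        // Phase 2: Rectangle is loaded and reaches the same call site, so code
        // compiled under the single-target assumption may have to be discarded.
        System.out.println(totalArea(new Rectangle(2, 5), 1_000_000));  // prints 10000000
    }
}
```

Running it with the HotSpot flag -XX:+PrintCompilation lists the methods the JIT compiles along the way; the exact log format differs between JVM versions.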

Class Data Sharing and Reduced Startup Time

The HotSpot VM also utilizes class data sharing (CDS) to reduce startup time. CDS involves pre-processing core classes and storing them in a shared archive. During startup, multiple JVM instances can map this archive into memory, avoiding the need to load and verify these classes individually.

While not directly part of dynamic linking, CDS relies on the same principles of on-demand loading and resource sharing that characterize dynamic linking, contributing to a faster and more efficient startup process.

Having explored the theoretical underpinnings and optimization mechanisms, let’s shift our focus to the practical realm. Dynamic linking isn’t merely a theoretical concept; it’s a powerful tool that can significantly impact the performance and architecture of real-world Java applications. By examining specific scenarios and providing concrete code examples, we can illustrate how dynamic linking can be leveraged to build more efficient and maintainable systems.

Real-World Applications and Practical Examples

Dynamic linking shines in scenarios demanding flexibility, scalability, and efficient resource management. Let’s examine a few key areas where it provides substantial benefits: large enterprise applications and plugin-based systems.

Enterprise Applications: Modular Development and Reduced Footprint

Large enterprise applications are often composed of numerous modules and libraries, each responsible for a specific set of functionalities. Using static linking in such environments can lead to bloated applications with excessively long startup times and a substantial memory footprint.

Dynamic linking, in contrast, enables a modular approach, where components are loaded only when needed. This significantly reduces startup time, as the application doesn’t need to load all modules at once. It also optimizes memory usage, as only the required modules are loaded into memory at any given time.

Furthermore, dynamic linking facilitates independent development and deployment of modules. Teams can work on different modules concurrently without impacting the entire application. This streamlines the development process and allows for more frequent and incremental releases.

Plugin-Based Systems: Extensibility and Customization

Plugin-based systems are designed to be extensible and customizable, allowing users to add or remove functionalities without modifying the core application. Dynamic linking is fundamental to this architecture, as it enables the dynamic loading and unloading of plugin modules.

Consider an image editing application that supports various image formats through plugins. Instead of including support for all possible formats in the core application, the application can load the necessary plugin dynamically when the user opens a specific file format.

This approach offers several advantages:

- Reduced core application size: The core application only contains the essential functionalities.

- Extensibility: New image formats can be supported simply by adding new plugins.

- Isolation: Plugins can be developed and deployed independently.

Code Example: Dynamic Class Loading

Let’s examine a simplified code example that demonstrates the dynamic loading of a class:

    import java.lang.reflect.Method;

    public class DynamicLoader {
        public static void main(String[] args) {
            try {
                // Load the class dynamically by its fully qualified name
                Class<?> loadedClass = Class.forName("com.example.MyPlugin");

                // Create an instance of the class through its no-argument constructor
                Object pluginInstance = loadedClass.getDeclaredConstructor().newInstance();

                // Look up the public execute() method and invoke it on the instance
                Method executeMethod = loadedClass.getMethod("execute");
                executeMethod.invoke(pluginInstance);
            } catch (Exception e) {
                // Covers a missing class, constructor, or method, and failures inside execute()
                e.printStackTrace();
            }
        }
    }

In this example, Class.forName() dynamically loads the class com.example.MyPlugin during runtime. The code then creates an instance of the loaded class and invokes its execute() method. This demonstrates how dynamic linking allows you to load and utilize classes without knowing their implementation at compile time.

This example is just a starting point. In real-world scenarios, dynamic class loading is often combined with interfaces and reflection to achieve greater flexibility and control over the loaded modules.
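As an illustration of combining dynamic loading with interfaces, here is a sketch of the image plugin scenario described earlier. Everything in it is hypothetical: FormatRegistry, the ImagePlugin interface, and the two plugin classes, which are nested in the same file only to keep the example self-contained. Plugins are looked up by class name, loaded on first use, and then called through the interface instead of through Method.invoke().

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Hypothetical registry that loads image format plugins on first use.
final class FormatRegistry {
    public interface ImagePlugin {
        String decode(String fileName);
    }

    public static final class PngPlugin implements ImagePlugin {
        @Override
        public String decode(String fileName) {
            return "PNG image from " + fileName;
        }
    }

    public static final class GifPlugin implements ImagePlugin {
        @Override
        public String decode(String fileName) {
            return "GIF image from " + fileName;
        }
    }

    private final Map<String, String> classNames = new HashMap<>();   // extension -> class name
    private final Map<String, ImagePlugin> loaded = new HashMap<>();  // plugins created so far

    FormatRegistry() {
        String prefix = FormatRegistry.class.getName() + "$";
        classNames.put("png", prefix + "PngPlugin");
        classNames.put("gif", prefix + "GifPlugin");
    }

    // Opens a file with the plugin for its extension, loading the plugin if necessary.
    String open(String fileName) {
        String ext = fileName.substring(fileName.lastIndexOf('.') + 1).toLowerCase(Locale.ROOT);
        ImagePlugin plugin = loaded.get(ext);
        if (plugin == null) {
            plugin = load(ext);
            loaded.put(ext, plugin);
        }
        return plugin.decode(fileName);
    }

    private ImagePlugin load(String ext) {
        String className = classNames.get(ext);
        if (className == null) {
            throw new IllegalArgumentException("No plugin registered for ." + ext);
        }
        try {
            // asSubclass() fails fast if the named class does not implement the interface.
            return Class.forName(className)
                    .asSubclass(ImagePlugin.class)
                    .getDeclaredConstructor()
                    .newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot load plugin " + className, e);
        }
    }

    int loadedCount() {
        return loaded.size();
    }

    public static void main(String[] args) {
        FormatRegistry registry = new FormatRegistry();
        System.out.println(registry.open("photo.png"));
        System.out.println("Plugins loaded so far: " + registry.loadedCount());
    }
}
```

After the first file is opened only the PNG plugin has been instantiated; the GIF plugin stays untouched until a .gif file is requested.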

Practical Considerations

While dynamic linking offers significant advantages, it also introduces some complexity. Proper error handling and security considerations are paramount when implementing dynamic class loading. Specifically, ensure that the class being loaded comes from a trusted source, to prevent malicious code from being executed.
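A simple first line of defense is to refuse any class name that has not been approved in advance. The sketch below assumes a hypothetical AllowListLoader with a fixed set of permitted names. It only restricts which names may be requested and does not by itself prove where the class bytes came from, so it complements rather than replaces measures such as code signing.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;

// Hypothetical guard that only loads classes whose names were approved in advance.
final class AllowListLoader {
    private final Set<String> allowedClassNames;

    AllowListLoader(Set<String> allowedClassNames) {
        this.allowedClassNames = Collections.unmodifiableSet(new HashSet<>(allowedClassNames));
    }

    // Loads the class only if its name is on the allow-list.
    Class<?> load(String className) throws ClassNotFoundException {
        if (!allowedClassNames.contains(className)) {
            throw new SecurityException("Class is not on the allow-list: " + className);
        }
        // initialize=false: no static initializer runs as a side effect of loading.
        return Class.forName(className, false, AllowListLoader.class.getClassLoader());
    }

    // Convenience wrapper for the demo: true if the class was accepted and found.
    static boolean canLoad(Set<String> allowList, String className) {
        try {
            new AllowListLoader(allowList).load(className);
            return true;
        } catch (SecurityException | ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        Set<String> allowList = Collections.singleton("java.util.ArrayList");
        System.out.println(canLoad(allowList, "java.util.ArrayList"));   // prints true
        System.out.println(canLoad(allowList, "java.lang.Runtime"));     // prints false
    }
}
```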

Having illustrated the tangible benefits and practical implementations of dynamic linking, particularly in the context of enterprise applications and plugin-based systems, it is vital to now turn our attention to the crucial aspect of responsible and effective implementation. Dynamic linking, while powerful, introduces its own set of complexities that developers must carefully navigate. Understanding and mitigating these challenges is key to unlocking the full potential of dynamic linking while avoiding common pitfalls.

Best Practices and Key Considerations for Implementation

Implementing dynamic linking effectively requires a thoughtful approach, addressing potential challenges related to dependency management, versioning conflicts, and, critically, security. Ignoring these considerations can lead to unstable applications, difficult-to-debug errors, and potential security vulnerabilities. Let’s delve into the best practices and key considerations to ensure a robust and secure implementation.

Navigating the Labyrinth: Dependency Management

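One of the primary challenges in dynamic linking is managing dependencies. When classes are loaded at runtime, the application must be able to locate and load all required dependencies. Incorrectly managed dependencies surface as a ClassNotFoundException (thrown when code asks a class loader for a class by name, for example through Class.forName(), and the class cannot be found) or a NoClassDefFoundError (thrown when a class that was available at compile time cannot be found while the JVM resolves a reference to it), either of which can bring the application down.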

The Classpath Conundrum

The classpath plays a crucial role in locating classes at runtime. It’s essential to ensure that the classpath is correctly configured to include all necessary JAR files or directories containing the dynamically loaded classes. This can become particularly complex in large applications with numerous dependencies.

Best Practice: Employ a well-defined and consistent classpath strategy across all environments (development, testing, and production). Use environment variables or configuration files to manage classpath settings, making them easily adaptable to different deployment scenarios.

Leveraging Dependency Management Tools

Modern dependency management tools like Maven or Gradle can significantly simplify the process of managing dependencies in dynamic linking scenarios. These tools allow you to define dependencies explicitly and automatically resolve transitive dependencies.

Best Practice: Integrate Maven or Gradle into your build process. These tools can handle dependency resolution, ensuring that all required libraries are available at runtime. They also facilitate version management and conflict resolution.

Taming the Beast: Versioning Conflicts

Versioning conflicts arise when different modules or libraries require different versions of the same dependency. This can lead to unpredictable behavior and runtime errors. Dynamic linking exacerbates this issue, as different versions of a class may be loaded at different times.

The Shadows of Class Loader Isolation

Employing multiple class loaders can help isolate different modules and prevent versioning conflicts. Each class loader can be configured to load a specific version of a library, preventing conflicts between modules.

Best Practice: Use custom class loaders to isolate different modules or plugins. This ensures that each module has its own isolated dependency environment, preventing versioning conflicts. Frameworks like OSGi are specifically designed to address these types of issues.
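The effect of class loader isolation can be demonstrated in a few lines. In this sketch (all names are hypothetical) the same class file is defined by two separate loader instances, and the JVM treats the results as two unrelated classes even though they share a name. It assumes the program runs from compiled class files, so that the bytes of the nested Library class can be read back as a resource.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;

// Hypothetical demo: one class file, two loaders, two distinct classes.
final class IsolationDemo {
    // Stands in for a library class that two plugins need in different versions.
    public static class Library { }

    // Defines exactly one class itself and delegates everything else to its parent.
    static final class IsolatingLoader extends ClassLoader {
        private final String isolatedName;
        private final byte[] classBytes;

        IsolatingLoader(String isolatedName, byte[] classBytes, ClassLoader parent) {
            super(parent);
            this.isolatedName = isolatedName;
            this.classBytes = classBytes;
        }

        @Override
        protected Class<?> loadClass(String name, boolean resolve) throws ClassNotFoundException {
            if (!name.equals(isolatedName)) {
                return super.loadClass(name, resolve);   // normal parent-first delegation
            }
            synchronized (getClassLoadingLock(name)) {
                Class<?> c = findLoadedClass(name);
                if (c == null) {
                    c = defineClass(name, classBytes, 0, classBytes.length);
                }
                if (resolve) {
                    resolveClass(c);
                }
                return c;
            }
        }
    }

    // Reads the compiled bytes of a class from the location it was loaded from.
    static byte[] readClassBytes(Class<?> type) throws IOException {
        String binaryName = type.getName();
        String fileName = binaryName.substring(binaryName.lastIndexOf('.') + 1) + ".class";
        try (InputStream in = type.getResourceAsStream(fileName)) {
            if (in == null) {
                throw new IOException("Class file not found: " + fileName);
            }
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buffer = new byte[4096];
            int n;
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
            }
            return out.toByteArray();
        }
    }

    // True if two loaders produce different Class objects for the same class name.
    static boolean loadsDistinctClasses() {
        try {
            String name = Library.class.getName();
            byte[] bytes = readClassBytes(Library.class);
            ClassLoader parent = IsolationDemo.class.getClassLoader();
            Class<?> first = new IsolatingLoader(name, bytes, parent).loadClass(name);
            Class<?> second = new IsolatingLoader(name, bytes, parent).loadClass(name);
            return first != second
                    && first != Library.class
                    && first.getName().equals(second.getName());
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("Same name, distinct classes: " + loadsDistinctClasses());
    }
}
```

Because each loader owns its own copy, two plugins could load different versions of a library under the same class name without interfering with each other. Loading the isolated class before asking the parent deliberately breaks the usual parent-first order, which is why it is restricted to a single class name here.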

Semantic Versioning: A Beacon of Light

Adhering to semantic versioning principles helps to communicate the compatibility of different versions of a library. This allows developers to make informed decisions about which versions to use and to avoid potential conflicts.

Best Practice: Adopt semantic versioning for your libraries. This provides clear information about the compatibility of different versions, helping to prevent conflicts. Clearly document any breaking changes between versions.

Guarding the Gates: Security Considerations

Dynamically loading code introduces significant security risks. Untrusted code can potentially compromise the entire application, leading to data breaches or other malicious activities. It’s imperative to implement robust security measures to mitigate these risks.

The Fortress of Code Signing

Code signing provides a mechanism to verify the authenticity and integrity of dynamically loaded code. By signing JAR files with a trusted certificate, you can ensure that the code has not been tampered with and that it originates from a trusted source.

Best Practice: Always sign dynamically loaded code with a trusted certificate. Verify the signature before loading the code to ensure its authenticity and integrity.
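The JDK’s java.util.jar API can perform the integrity part of this check. The sketch below is a simplified illustration, and JarSignatureCheck is a hypothetical name. It opens a JAR with verification enabled, reads every entry so that tampering is detected, and reports whether each content entry carries a code signer. It does not decide whether a signer is trusted; a production check would also compare the signer certificates against a set of certificates the application trusts.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Collections;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;
import java.util.jar.JarOutputStream;

// Hypothetical helper that checks whether every content entry of a JAR is signed.
final class JarSignatureCheck {
    // True only if the jar has at least one content entry and all of them carry a signer.
    static boolean allEntriesSigned(Path jarPath) throws IOException {
        try (JarFile jar = new JarFile(jarPath.toFile(), true)) {   // true = verify signatures
            byte[] buffer = new byte[8192];
            boolean sawEntry = false;
            for (JarEntry entry : Collections.list(jar.entries())) {
                if (entry.isDirectory() || entry.getName().startsWith("META-INF/")) {
                    continue;
                }
                // Signers are only available after the entry has been read completely;
                // reading a tampered entry throws a SecurityException.
                try (InputStream in = jar.getInputStream(entry)) {
                    while (in.read(buffer) != -1) {
                        // discard the data; reading is what triggers verification
                    }
                }
                if (entry.getCodeSigners() == null) {
                    return false;
                }
                sawEntry = true;
            }
            return sawEntry;
        }
    }

    // Creates a small unsigned jar so the check can be tried without external files.
    static Path writeUnsignedJar() throws IOException {
        Path jar = Files.createTempFile("unsigned-plugin", ".jar");
        try (OutputStream out = Files.newOutputStream(jar);
             JarOutputStream jarOut = new JarOutputStream(out)) {
            jarOut.putNextEntry(new JarEntry("plugin.properties"));
            jarOut.write("name=demo".getBytes(StandardCharsets.UTF_8));
            jarOut.closeEntry();
        }
        return jar;
    }

    // Demo wrapper: an unsigned jar must not pass the check.
    static boolean unsignedJarPassesCheck() {
        try {
            Path jar = writeUnsignedJar();
            try {
                return allEntriesSigned(jar);
            } finally {
                Files.deleteIfExists(jar);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("Unsigned jar accepted: " + unsignedJarPassesCheck());   // prints false
    }
}
```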

The Walls of Sandboxing

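Sandboxing restricts the access of dynamically loaded code to system resources, preventing it from performing unauthorized actions. Historically this was achieved with the Java Security Manager, but that API has been deprecated for removal since Java 17, so newer designs need other isolation mechanisms, such as running untrusted code in a separate process with restricted operating-system privileges.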

Best Practice: Implement a robust sandboxing mechanism to restrict the access of dynamically loaded code to system resources. Define a clear security policy that limits the actions that dynamically loaded code can perform.

The Vigilance of Input Validation

Validate all inputs received from dynamically loaded code to prevent injection attacks or other vulnerabilities. Carefully scrutinize any data passed to the loaded code, ensuring that it conforms to expected formats and values.

Best Practice: Thoroughly validate all inputs received from dynamically loaded code. Use parameterized queries or other techniques to prevent injection attacks. Employ input sanitization to remove potentially harmful characters or code.

By diligently addressing dependency management, versioning conflicts, and security considerations, developers can harness the power of dynamic linking to create more flexible, scalable, and maintainable Java applications, while mitigating the inherent risks associated with dynamic code loading.

FAQs About Java Dynamic Linking and Performance

Got questions about Java dynamic linking and how it can boost your application’s speed? Here are some common inquiries and clear answers to help you understand the concept better.

What exactly is Java dynamic linking?

Java dynamic linking is the process of linking a class or library to a Java Virtual Machine (JVM) at runtime, rather than at compile time. This means the code for a library isn’t loaded until it’s actually needed, which can save memory and improve startup time.

How does dynamic linking in Java improve performance?

Dynamic linking improves performance in Java by reducing the initial overhead of loading all the required classes and libraries. Only the necessary components are loaded when they are first used, leading to faster application startup and reduced memory footprint, especially beneficial for large applications.

When is Java dynamic linking most useful?

Java dynamic linking is particularly useful in situations where not all code is required at startup or where libraries might be updated frequently. Plugins, optional features, and modular applications benefit greatly from dynamic linking in Java.

Are there any potential downsides to using Java dynamic linking?

While beneficial, Java dynamic linking can introduce a small delay the first time a dynamically linked class or library is accessed. This is because the JVM needs to locate, load, and link the code. However, this delay is usually insignificant compared to the overall performance gains.

Alright, I hope you found this exploration of Java dynamic linking useful for boosting your app’s performance. Now, get out there and put those new skills to work!