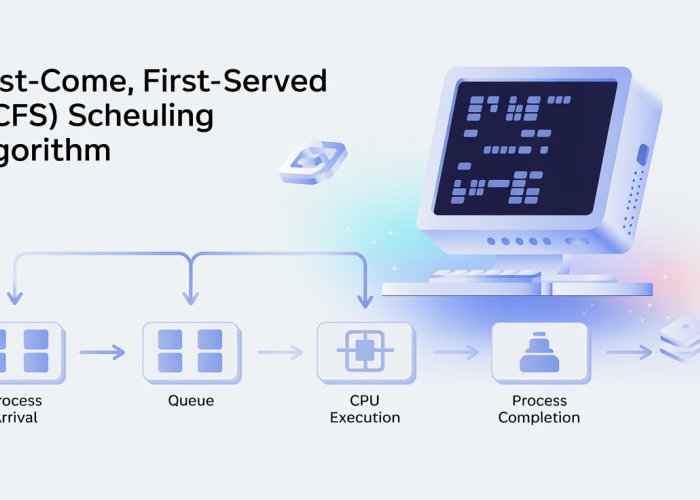

First-Come, First-Served (FCFS), a fundamental concept in operating systems, governs process scheduling, especially concerning what is fcfs and how it operates. Understanding FCFS is crucial for optimizing resource allocation, as many scheduling algorithms are built upon or compared against its principles. The efficiency of FCFS often depends on the order of task arrival, influencing overall system throughput and responsiveness, aspects carefully managed by entities like the Project Management Institute.

In the intricate world of operating systems, managing the central processing unit (CPU) efficiently is paramount. This is where CPU scheduling comes into play, orchestrating the execution of multiple processes to ensure optimal system performance.

At the heart of this orchestration lies a variety of scheduling algorithms, each with its own unique approach.

Among these, the First-Come, First-Served (FCFS) algorithm stands out as a fundamental and intuitive method.

CPU Scheduling: The Foundation of Operating System Efficiency

Operating systems are designed to handle numerous tasks concurrently. This creates a need for a mechanism that determines which process gets access to the CPU and for how long.

This mechanism is known as CPU scheduling, and it is a critical component of any modern operating system.

Effective CPU scheduling aims to maximize CPU utilization, minimize response time, and ensure fairness among competing processes.

FCFS: A Simple Yet Foundational Algorithm

The First-Come, First-Served (FCFS) algorithm is perhaps the simplest CPU scheduling algorithm.

As its name suggests, FCFS allocates the CPU to processes in the order they arrive in the ready queue.

This straightforward approach makes it easy to understand and implement.

FCFS serves as a valuable building block for grasping more complex scheduling techniques.

Guide Purpose and Scope

This guide aims to provide a comprehensive understanding of the FCFS algorithm.

We will delve into its mechanics, exploring how it works step-by-step with illustrative examples.

Furthermore, we will critically evaluate its advantages and disadvantages, shedding light on its strengths and limitations.

By the end of this guide, you will have a solid grasp of the FCFS algorithm and its role in CPU scheduling.

In essence, FCFS provides a transparent and easily understandable method for managing processes. Its simplicity, however, is intertwined with specific operational principles that determine its behavior within the system. Let’s dissect the core tenets that define how FCFS schedules processes.

FCFS Demystified: The Core Principles

At its heart, the First-Come, First-Served (FCFS) algorithm operates on a remarkably straightforward principle: the process that requests the CPU first is allocated the CPU first. This seemingly simple rule has profound implications for how processes are managed and executed within the operating system.

First Request, First Serve

The fundamental tenet of FCFS is its adherence to arrival order. Processes are treated like customers waiting in a line; whoever arrives first gets served first.

This means that the sequence in which processes enter the ready queue directly dictates the order in which they gain access to the CPU.

There are no complex priority calculations or pre-emptive measures involved, just a linear progression based on arrival time.

The Queue’s Decisive Role

The queue is an essential element in FCFS scheduling.

The ready queue acts as a holding area for processes waiting for their turn to use the CPU.

As processes arrive, they are added to the rear of the queue.

The FCFS scheduler then selects processes from the front of the queue, granting them CPU access in the order they were enqueued.

The queue ensures that processes are managed in a structured manner, reinforcing the first-come, first-served principle.

Non-Preemptive Nature: Run to Completion

One of the most significant characteristics of FCFS is that it is a non-preemptive algorithm.

Once a process gains access to the CPU, it retains control until it either completes its execution or voluntarily relinquishes the CPU by requesting I/O or terminating.

This means that a long-running process can effectively block other shorter processes from executing, even if those shorter processes arrive earlier.

This non-preemptive nature can lead to performance bottlenecks in certain scenarios, a topic we will explore further when examining the algorithm’s limitations.

One crucial aspect remains: understanding how FCFS translates from theory into tangible actions. It’s time to delve into the practical application of the FCFS algorithm, examining its step-by-step operation, and solidifying our comprehension through a detailed example.

FCFS in Action: A Step-by-Step Walkthrough

To truly grasp the essence of the First-Come, First-Served (FCFS) algorithm, we need to see it in action. Let’s dissect its practical implementation through a step-by-step walkthrough, complete with a comprehensive example that illuminates its operational mechanics.

Understanding the Input Parameters: Arrival Time and Burst Time

At its core, the FCFS algorithm relies on two key input parameters for each process: Arrival Time and Burst Time. These parameters are fundamental to the scheduling decision.

-

Arrival Time: This denotes the time at which a process enters the ready queue, signifying its request for CPU execution. It’s the process’s "entry ticket" into the scheduling process.

-

Burst Time: This represents the total CPU time a process requires to complete its execution. It’s essentially the "duration" of the process’s CPU usage.

The Scheduler’s Role: Selecting Processes Based on Arrival Order

The FCFS scheduler operates on a simple yet decisive principle: arrival order. The scheduler meticulously examines the ready queue, prioritizing processes based solely on their arrival times.

The process that has been waiting the longest—the one at the front of the queue—is selected for CPU allocation. This straightforward approach ensures a fair and predictable execution sequence.

Visualizing the Process: The Gantt Chart

To effectively illustrate the scheduling process, we often employ a Gantt Chart. This visual tool provides a timeline representation of process execution.

The Gantt Chart clearly depicts the sequence in which processes are executed, the start and end times for each process, and any periods of CPU idle time. It’s a powerful tool for understanding and analyzing the FCFS scheduling behavior.

Let’s consider a scenario with three processes: P1, P2, and P3.

| Process | Arrival Time | Burst Time |

|---|---|---|

| P1 | 0 | 8 |

| P2 | 1 | 4 |

| P3 | 2 | 9 |

The Gantt Chart would look like this:

0 8 12 21

|-----|-------|---------|

P1 P2 P3

P1 arrives first (at time 0) and runs for 8 units of time. P2 arrives at time 1 but must wait for P1 to finish. It then runs for 4 units. Finally, P3 arrives at time 2, waits for P1 and P2, and runs for 9 units.

Quantifying Performance: Waiting Time and Turnaround Time

To evaluate the performance of the FCFS algorithm, we calculate two key metrics: Waiting Time and Turnaround Time. These metrics provide insights into the efficiency and responsiveness of the scheduling process.

-

Waiting Time: This is the time a process spends waiting in the ready queue before it gets the CPU. It reflects the delay experienced by the process due to other processes being executed ahead of it.

-

Turnaround Time: This is the total time taken from the process’s arrival to its completion. It encompasses both the waiting time and the burst time.

- Turnaround Time = Completion Time – Arrival Time

- Waiting Time = Turnaround Time – Burst Time

For our example:

- P1: Waiting Time = 0, Turnaround Time = 8

- P2: Waiting Time = 8 – 1 = 7, Turnaround Time = 12 – 1 = 11

- P3: Waiting Time = 12 – 2 = 10, Turnaround Time = 21 – 2 = 19

Average Waiting Time: (0 + 7 + 10) / 3 = 5.67

Average Turnaround Time: (8 + 11 + 19) / 3 = 12.67

Comprehensive Example: Bringing it All Together

Let’s solidify our understanding with a more complex example. Consider the following processes with their respective arrival and burst times:

| Process | Arrival Time | Burst Time |

|---|---|---|

| P1 | 0 | 5 |

| P2 | 1 | 3 |

| P3 | 2 | 8 |

| P4 | 3 | 6 |

Using the FCFS algorithm:

-

P1 arrives first and is executed immediately.

-

P2 arrives at time 1 but has to wait until P1 completes at time 5.

-

P3 arrives at time 2, waiting for both P1 and P2.

-

P4 arrives at time 3, waiting for P1, P2, and P3.

The Gantt Chart would be:

0 5 8 16 22

|----|-----|-------|--------|

P1 P2 P3 P4

Calculating Waiting and Turnaround Times:

- P1: Waiting Time = 0, Turnaround Time = 5

- P2: Waiting Time = 5 – 1 = 4, Turnaround Time = 8 – 1 = 7

- P3: Waiting Time = 8 – 2 = 6, Turnaround Time = 16 – 2 = 14

- P4: Waiting Time = 16 – 3 = 13, Turnaround Time = 22 – 3 = 19

Average Waiting Time: (0 + 4 + 6 + 13) / 4 = 5.75

Average Turnaround Time: (5 + 7 + 14 + 19) / 4 = 11.25

This example clearly demonstrates how the FCFS algorithm operates in practice. While simple in its execution, it highlights the potential for longer waiting times, particularly for processes arriving later in the queue, even if their burst times are shorter.

One step at a time, we’ve unpacked the inner workings of FCFS, tracing its operational flow and witnessing its application in a practical scenario. Having illuminated the ‘how’ of FCFS, it’s only natural to turn our attention to the ‘why’. What makes this seemingly basic algorithm appealing? Where does it shine amidst the array of scheduling options?

The Upsides of FCFS: Simplicity and Fairness

Despite its limitations, the First-Come, First-Served (FCFS) algorithm possesses inherent advantages that make it a valuable concept in the study of operating systems. Its strength lies primarily in two core aspects: simplicity and fairness.

Unmatched Simplicity: The Key to Understanding

The FCFS algorithm is remarkably simple to understand and implement. Its logic follows a straightforward principle: the process that requests the CPU first is allocated the CPU first.

This ease of comprehension translates into ease of implementation. There are no complex calculations or priority assignments to manage.

The scheduler simply maintains a queue, and processes are served in the order they arrive. This simplicity makes it an ideal starting point for learning about CPU scheduling algorithms.

It also reduces the overhead associated with scheduling, as the algorithm requires minimal processing power to make decisions. This can be especially beneficial in systems with limited resources.

Inherent Fairness: Equal Opportunity for All

FCFS offers a basic level of fairness. Every process that enters the ready queue is guaranteed a chance to run eventually.

There are no processes that are perpetually starved of CPU time due to higher priority assignments or other factors. This fairness can be particularly important in systems where it’s crucial to ensure that all processes receive some amount of processing time.

However, it’s important to note that this fairness doesn’t necessarily translate to optimal performance. The convoy effect, which we will discuss later, can still lead to longer waiting times for some processes.

FCFS: A Foundation for Further Exploration

While FCFS may not be the most sophisticated scheduling algorithm, its simplicity and fairness make it a valuable tool for understanding the fundamental principles of CPU scheduling.

It serves as a solid foundation upon which more complex algorithms can be built, and its ease of implementation makes it a practical choice in certain situations.

One step at a time, we’ve unpacked the inner workings of FCFS, tracing its operational flow and witnessing its application in a practical scenario. Having illuminated the ‘how’ of FCFS, it’s only natural to turn our attention to the ‘why’. What makes this seemingly basic algorithm appealing? Where does it shine amidst the array of scheduling options?

The Downsides of FCFS: Addressing the Limitations

While FCFS boasts simplicity and a certain level of inherent fairness, it’s crucial to acknowledge its limitations. These drawbacks can significantly impact system performance, especially in certain scenarios. Understanding these shortcomings is vital for making informed decisions about CPU scheduling.

The Perilous "Convoy Effect"

One of the most significant drawbacks of FCFS is the convoy effect. This phenomenon occurs when a long-running process monopolizes the CPU.

This causes all shorter processes behind it to wait for an extended period. Imagine a convoy of vehicles on a highway where a slow-moving truck dictates the pace for everyone behind it.

The result is increased average waiting times and reduced overall system throughput. Shorter, quicker tasks are unnecessarily delayed by a single, resource-intensive job.

Inefficiency in Time-Sharing Environments

FCFS is inherently inefficient for time-sharing systems. Time-sharing operating systems aim to provide interactive experiences for users by rapidly switching between processes.

FCFS’s non-preemptive nature prevents this rapid context switching. Once a process starts, it holds the CPU until completion, regardless of other processes waiting.

This can lead to noticeable delays and a sluggish user experience. Users may experience unacceptable lags when interacting with applications.

Unsuitability for Priority-Based Systems

FCFS completely disregards process priorities. All processes are treated equally, regardless of their importance or urgency.

In systems where certain tasks are more critical than others (e.g., real-time systems), FCFS is simply not a viable option. High-priority tasks can be stuck waiting behind low-priority ones.

This can have serious consequences, potentially leading to missed deadlines or system failures. Scenarios requiring prioritization are completely incompatible with the FCFS paradigm.

One step at a time, we’ve unpacked the inner workings of FCFS, tracing its operational flow and witnessing its application in a practical scenario. Having illuminated the "how" of FCFS, it’s only natural to turn our attention to the "why". What makes this seemingly basic algorithm appealing? Where does it shine amidst the array of scheduling options?

FCFS and Its Peers: A Comparative Analysis

While FCFS offers simplicity and ease of understanding, it doesn’t exist in a vacuum. A multitude of other CPU scheduling algorithms strive to optimize system performance based on various criteria. Comparing FCFS with these alternatives helps contextualize its strengths and weaknesses, allowing for a more informed decision about its applicability.

FCFS vs. Other Scheduling Algorithms

Several scheduling algorithms offer distinct approaches to process management, each with its own set of trade-offs. Let’s briefly examine a few:

-

Shortest Job First (SJF): SJF prioritizes processes with the shortest burst times. This typically leads to lower average waiting times compared to FCFS, particularly when long processes are present. However, SJF requires knowing (or predicting) burst times in advance, which is often impractical. It can also lead to starvation for longer processes.

-

Priority Scheduling: Priority scheduling assigns a priority to each process, and the CPU is allocated to the process with the highest priority. This allows critical tasks to be executed quickly, but it can also lead to starvation for lower-priority processes if not implemented carefully (aging can be used to mitigate this). FCFS, in contrast, treats all processes equally, regardless of their importance.

-

Round Robin (RR): Round Robin is designed for time-sharing systems. It assigns a fixed time slice (quantum) to each process, and the CPU switches between processes after each quantum. This ensures that all processes get a fair share of the CPU and prevents any single process from monopolizing it. FCFS’s non-preemptive nature makes it unsuitable for time-sharing, as a long process can block all other processes.

Situations Where FCFS Remains a Viable Choice

Despite its limitations, FCFS can still be a suitable scheduling algorithm in specific situations:

-

Simple Systems with Low Process Arrival Rates: If the system is relatively simple and the rate at which processes arrive is low, the convoy effect is less likely to be a major problem. In such cases, the simplicity of FCFS can outweigh its potential inefficiencies.

-

Batch Processing: FCFS can be effective for batch processing systems where a large number of jobs are submitted together and processed sequentially. In this scenario, fairness and predictability might be more important than minimizing average waiting time.

-

When Predictability Is Paramount: FCFS provides highly predictable execution. As processes are executed in their arrival order, the completion time of each process can be easily predicted. This can be valuable in applications where timing is critical.

-

As a Component in Hybrid Scheduling: FCFS can be used as part of a hybrid scheduling algorithm, where it is applied to a subset of processes or during specific time intervals. For example, a system might use priority scheduling for interactive tasks and FCFS for background jobs.

In conclusion, while more sophisticated scheduling algorithms often provide better overall performance, the simplicity and predictability of FCFS make it a viable choice in certain contexts. Understanding these contexts is crucial for making informed decisions about CPU scheduling in different environments.

FCFS Explained: Frequently Asked Questions

Here are some common questions about First-Come, First-Served (FCFS) scheduling, to help clarify how it works.

What are the biggest advantages of using FCFS?

The main advantage of FCFS is its simplicity. It’s easy to understand and implement, requiring minimal overhead. This makes it a good choice in situations where fairness isn’t a primary concern, but simplicity is. Also, what is FCFS but simple?

What are the disadvantages of FCFS scheduling?

FCFS can lead to the convoy effect, where a long process blocks shorter ones, increasing average waiting time. It’s also not preemptive, meaning a process keeps the CPU until it finishes, even if other high-priority tasks are waiting. What is FCFS good for if not preemptive scheduling?

How is FCFS different from other scheduling algorithms?

Unlike priority scheduling, FCFS doesn’t consider process importance. It also differs from Shortest Job First (SJF), which favors shorter processes. FCFS purely prioritizes arrival time, offering a fairer but potentially less efficient approach than algorithms that optimize for performance metrics like throughput. What is FCFS if not the least algorithm?

In what scenarios is FCFS most appropriate?

FCFS is best suited for batch processing systems where fairness is less important than ease of implementation. It can also be suitable for systems with relatively similar job lengths, mitigating the convoy effect. Consider what is FCFS and ask yourself if speed or fairness is more important.

So, hopefully, you’ve got a good handle on what is fcfs now! Go forth and conquer those queues, and remember – first in, first served! Thanks for sticking around!